Evolution of RTC audio

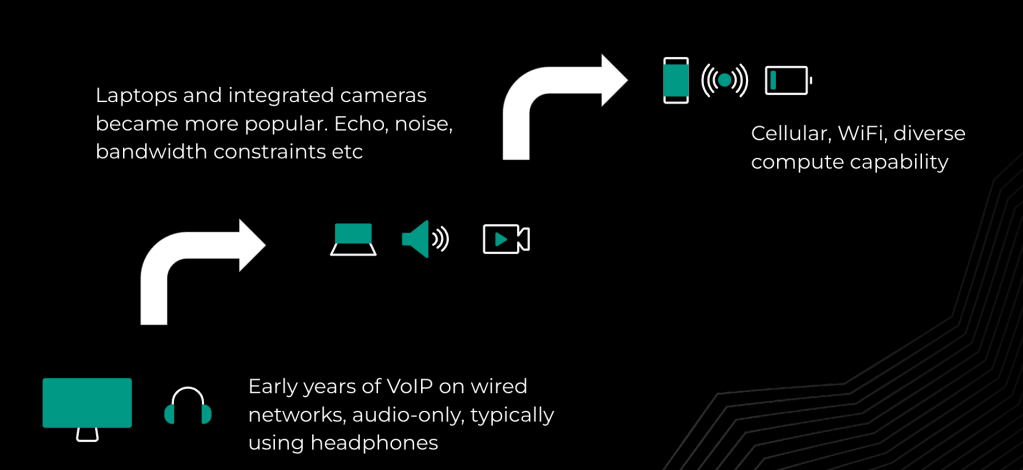

Real-time communication (RTC) has come a long way, from desktop audio-only calls on wired networks to communications anytime, anywhere on cellular networks. At the same time, these advances have also introduced new challenges in terms of both network and device. Whereas at the start of the voice-over-internet protocol (VoIP) revolution, users were excited just by the prospect of making a free international call, after more than two decades of familiarity, the modern user has high expectations for quality, and rightly so. As we seek to deliver the magic of being in the same shared space, new immersive scenarios are emerging in the Metaverse with even lower tolerance for distortions.

To deliver reliable high-quality, low-latency, immersive audio, the journey starts with making sure the foundational elements are in good working order. We don’t want to start every call asking the dreaded words, “Can you hear me?” Excessive latency in a call reduces interactivity, resulting in participants frequently talking over one another. Many calls take place over low-bandwidth cellular connections, and even the best WiFi networks experience occasional congestion.

Robust packet loss and jitter compensation is another important foundational element. And of course, no one likes to hear background noise or their own voice echoed, which necessitates high-quality full-duplex acoustic echo cancellation (AEC) and non-stationary noise suppression. Once these essentials are taken care of, the journey progresses towards enabling a high-quality experience—delivering music-quality, full-band stereo audio, taking us one step closer to “being there.” The next step in this journey is enabling immersive Metaverse audio experiences such as spatial audio, which is key for creating the magic of being in the same space. We’ll get to this at the end, but first let’s talk about the essentials.

Why is this a hard problem?

Audio in RTC is not new; it has been studied for several decades. So, why is this still a challenge? Billions of people use WhatsApp, Messenger, Instagram, and other apps, across a variety of devices and acoustic conditions. The characteristics of microphones and loudspeakers and the coupling between them, room reverberation and background noise, and the distance between mouth and microphone all differ widely—unlike building an audio pipeline on a single known device. To provide a good user experience, our solutions and their quality need to scale from low-CPU, low-bandwidth conditions at one end of the spectrum, to the most modern phones on 5G at the other end, so we can provide the best experience to our users based on their particular device and network. And importantly, users have a very low tolerance for audio distortions compared to distortions in other modalities. Even minor cutouts due to network conditions or disturbances due to echo and noise tend to be annoying, if not disruptive.

The audio pipeline

Meta’s RTC audio pipeline has its roots in WebRTC, which back in the day was a great way to bootstrap our solutions. Today, most audio components have been replaced with in-house equivalents to address our new use cases, both for the family of apps and the Metaverse.

Later in this blog, we will focus on the less-spoken-about but critical component—audio device management, both capture and render—to address audio reliability and the no-audio problem. Before that, for completeness, let’s quickly walk through the other components.

Thanks to machine learning (ML) and deep neural nets (DNN), acoustic echo cancellation (AEC) and noise suppression (NS) have seen significant progress in recent years, including within Meta’s products. While modern mobile devices feature built-in AEC and NS, most apps include software AEC and NS for devices that do not have hardware (HW) AEC/NS, or to provide an improved and consistent experience across devices. For AEC, there is a tradeoff between avoiding echo leaks and maintaining interruptibility, also known as double talk. A trivial solution would be to soft-mute the mic whenever there is signal activity on the loudspeaker—aka “walkie-talkie mode”—but the local talker wouldn’t be able to interrupt the far-end talker. Recent approaches involve a linear, filter-based canceller followed by a ML-based suppressor that preserves double talk. Our experience at Meta, where most of our users are on mobile devices, has shown us the need for a baseline low-compute version that can run across all devices, with more advanced models made available on more capable devices. For ML-based noise suppression, it is not trivial to decide what is and isn’t noise—that is for our users to decide. For example, dogs barking or a baby crying in the background may be desired sounds in certain contexts.

Automatic Gain Control (AGC) is essential to maintain normalized volume levels. For large group calls, AGC is also important when selecting active participants either in a selective forwarding unit (SFU) or multipoint control unit (MCU) model. Optional voice effects may be applied at this stage in the pipeline after echo cancellation, noise suppression, and gain control.

Next in the pipeline is the encoder. Low-bitrate encoding at high quality is another area that has received much attention in recent years and is key to operating in low-bandwidth networks. Despite advances in network technology, markets with the largest growth in monthly active users (MAUs) are also the ones with the most challenging bandwidth constraints, making this an important problem across the industry. Uncompressed speech at a super-wideband sampling rate of 32 kHz and 16 bits per sample requires a bitrate of 32000 * 16 = 512 kbps. Modern codecs are able to compress this to below 32 kbps with minimal loss in perceivable quality, with the newer ones targeting as low as 4 or 6 kbps. While it may be tempting to employ DNNs here, it’s important to note that calls in ultra-low bandwidth conditions typically tend to occur on devices with the most constrained CPU capabilities, requiring different solutions instead of heavy ML models.

The encoded bitstream is packetized, augmented with redundancy for error resilience as required, encrypted, and sent over the wire as RTP (real-time transport protocol) packets. On the receiver, components such as the jitter buffer, packet loss concealer, and decoder compensate for variations in packet arrival times as well as packet losses to ensure a low-delay, smooth playout. Some implementations include automatic gain control (AGC) in the render path to not rely on send-side AGC. Audio effects such as spatialization may be applied here before the signal is handed over to the render device manager for playout.

Audio device management

Now let’s zoom in on audio device management. This component is responsible for delivering audio samples from the microphone to the capture pipeline, and from the render pipeline to the loudspeaker. Components such as echo and noise control, codecs, and jitter buffer can be developed and tested at scale using a representative database of publicly available audio files and network traces. Audio-device management, however, requires physical device testing–which is notoriously difficult. Historically, audio device testing was done on a small set of devices in a lab setting. This quickly falls short, however, as Meta’s global scale makes it impossible to test all our users’ devices in a lab setting. We needed to innovate using telemetry to identify problems and their root causes, so we could fix and test them in production. Our commitment to end-to-end encryption and privacy means this had to be done without any audio data or any form of user-identifiable information. To achieve this goal, we built a fully privacy-compliant framework. At the end of a call, randomly selected users are requested to rate the call, with a follow-up survey for poorly rated calls. For calls where the user indicate they cannot hear audio or cannot be heard, our automated framework analyzes telemetry to identify potential reasons along the processing chain

Here’s an example of this framework in action. Let’s assume, hypothetically, that users report no-audio for one percent of all calls. We are now able to break down this one percent into distinct problem categories—capture, playback, transport-related, and so on. And we can then break down each category further into non-overlapping sub-categories that can be root-caused and fixed.

For instance, capture problems may be caused by a low signal level on my end or the remote end. Perhaps I am muted. There could be device initialization errors on either end due to incorrect configuration or device peculiarities. The audio device may stall mid-call and stop providing samples to the pipeline. And so on.

We will always have a percentage of problems categorized as unknown. In our experience, users sometimes interpret the survey choices differently, and rightly so; for instance, they’ll select “No audio” as the survey choice even when there has been only momentary loss of audio due to network conditions. These are still important to address, and we do so as part of our network-resilience efforts, but we may not want to include them as part of our no-audio problem. Now that we have validated our framework, we are able to rely on our technical indicators of no-audio to make continuous progress.

Based on the error codes we logged, we were able to “root-cause” and fix several issues using this breakdown. Pop-up notifications alerting the user they are muted addressed mute-related problems. Several no-audio problems were related to audio route management on Android or iOS—selecting the appropriate mode such as earpiece, speaker, bluetooth, wired headset, and so on. We also had to make users aware of the need to grant mic permissions for audio to flow. Audio devices can occasionally stall and stop providing samples from the microphone or stop rendering samples, and we were able to identify and mitigate these.

Another example is a no-audio bug we fixed on Instagram on iOS. When another app that also accesses the microphone is active (for instance, a video recorder), making a call on our Instagram app would result in no audio occasionally. This behavior makes sense when you receive a phone call while listening to music—the operating system interrupts the execution of the foreground application, and the audio session linked with this application gets deactivated until the interruption has ended. In our scenario, however, we found the Instagram app audio session to be deactivated even when the user explicitly displays intent by placing a call or answering a call. Once we found this root cause from our logging, the fix was simple—to disregard the interrupt when we are in an active session. This and many other fixes end up being very simple in hindsight—as they always are—but the key is having a good framework to uncover and root-cause such issues.

We continue to invest in this area, as no-audio is not just a minor annoyance but also a call-blocking problem.

Looking ahead

In traditional video conferencing, each participant is in a different room, and as we see everyone located in these different environments, to some extent our mental model accommodates resulting differences in audio quality. In a Metaverse setting where we aim to deliver the magic of being present in the same shared virtual space, the tolerance for various audio distortions is very low, since we don’t experience any of these when we are all physically together. As the participants are joining the call from their own rooms, each with their own unique acoustical characteristics that need to be compensated for, all of the problems we’ve discussed so far—echo, noise, latency, etc.—assume a new level of importance.

When talking about large calls with hundreds of participants, there are also problems of client and server scaling. Traditional methods of audio capping will not work in such a context, where you want to hear not only the conversation you are part of but also the other conversations happening on the side, and other sounds in the virtual environment, just like in real life. While generating ambisonic mixes on the server can address the client bandwidth problem, spatial accuracy will be limited. Sending dozens of mono streams to each client to be further spatialized will strain the client’s bandwidth. We’ll need an intelligent, proximity-based hybrid approach that strikes a good balance between scalability and quality.

These are exciting times for audio, where we need not only to solve traditional problems with renewed vigor but also innovate to deliver new immersive experiences.