Additional Authors: Betty Ni, Bo Feng, Howard Lu, Kevin Liu, Kushal Adhvaryu, and Qianqian Zhong

In this blog post, we will explain the end-to-end systems the Facebook app uses to deliver relevant content to users. We will cover our video-unification efforts that have simplified our product experience and infrastructure, some in-depth mobile-delivery details, and some new features we are working on in our video-content delivery stack. If you come across any terms you are unfamiliar with, please refer to the Glossary of Terms at the end of the post.

The end-to-end delivery of highly relevant, personalized, timely, and responsive content comes with complex challenges. At Facebook’s scale, the systems built to overcome these challenges require extensive trade-off analyses, focused optimizations, and an architecture that allows hundreds of engineers to push for the same user and business outcomes.

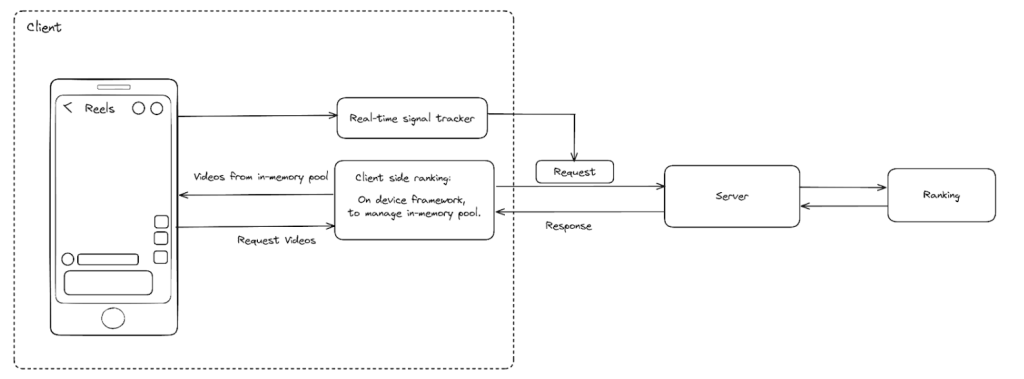

In its simplest form, we have three systems to support Facebook video delivery: ranking, server, and mobile.

- Ranking (RecSys): Recommends content that fulfills user interests (i.e., short term, long term, and real time) while also allowing for novel content and the discovery of content outside the user’s historical engagement. The architecture supports flexibility for various optimization functions and value modeling, and it builds on delivery systems that allow for tight latency budgets, rapid model deployments, and a bias toward fresh content.

- Server (WWW): Brokers between mobile/web and RecSys. It serves as the central business logic that powers all of Facebook (FB) Video’s feature sets, and it delivers the appropriate content recommendations from RecSys to the mobile clients. It controls key delivery characteristics such as content pagination, deduplication, and ranking signal collection, and it manages key systems trade-offs such as capacity through caching and throttling.

- Mobile (fb4a/fbios): Facebook’s mobile apps are highly optimized for user experience beyond the pixels. Mobile is built with frameworks such as client-side ranking (CSR), which allows for delivery of the optimal content to users at exactly the point of consumption, at times without needing a round trip to the server.

Video unification

Why unify?

It would be hard to talk about Facebook’s video delivery without mentioning our two years of video-unification efforts. Many of the capabilities and technologies we will reference below would not have been possible without the efforts taken to streamline and simplify Facebook video products and technical stacks.

Let’s dive in. Previously, we had various user experiences, mobile client layers, server layers, and ranking layers for Watch and Reels. In the past couple of years, we have been consolidating the app’s video experiences and infrastructure into a single entity.

The reason is simple. Maintaining multiple video products and services leads to a fragmented user and developer experience. Fragmentation leads to slower development, complicated and inconsistent user experiences, and fewer positive app recommendations. Watch and Reels, two similar products, were functioning quite separately, which meant we couldn’t share improvements between them, leading to a worse experience across the board.

This separation also created a lot of overhead for creators. Previously, if creators wanted distribution to a certain surface, they would need to create two types of content, such as a Reel for immersive Reels surfaces and a VOD for Watch Tab. For advertisers, this meant creating different ads for different ad formats.

Complexity of mobile and server technical-stack unification

The first step in unification was unifying our two client and server data models and two architectures into one, with no changes to the user interface (UI). The complexity here was immense, and this technical-stack unification took a year across the server, iOS, and Android, but was a necessary step in paving the way for further steps.

Here are some variables that added to the complexity:

- Billions of users for the product. Any small, accidental shift in logging, UI, or performance would immediately be seen in top line metrics.

- Tens of thousands of lines of code per layer across Android, iOS, and server.

- Merging Reels and Watch while keeping the best of both systems took a lot of auditing and debugging.

- We needed to audit hundreds of features and thousands of lines of code to ensure that we preserved all key experiences.

- The interactions between layers also needed to be maintained while the code beneath them was shifting. Logging played a key role in ensuring this.

- Product engineers continued work on both the old Reels and Watch systems, improving the product experience for users and improving key video metrics for the Facebook app. This created a “moving goal post” effect for the new unified system, since we had to match these new launches. We had to move quickly and choose the right “cutoff” point to move all the video engineers to work on the new stack as early as possible.

  - If we transferred the engineers too early, approximately 50 product engineers would not be able to hit their goals, while also causing churn in the core infrastructure.

  - If we transferred them too late, even more work would be required on the new, unified infrastructure to port old features.

- Maintenance of logging for core metrics for the new stack. Logging is extremely sensitive and implemented in different ways across surfaces. We had to sometimes re-implement logging in a new way to serve both products. We also had to ensure we maintained hundreds of key logging parameters.

- We had to do all of this while maintaining engagement and performance metrics to ensure the new architecture met our performance bar.

Complexity of migrating Watch users to a Reels surface

The next step was moving all of our VOD feed-chaining experiences to use the immersive Reels UI. Since the immersive Reels UI was optimized for viewing short-form video, whereas the VOD feed-chaining UI was optimized for viewing long-form video, it took many product iterations to ensure that the unified surface could serve all of our users’ needs without any compromises. We ran hundreds of tests to identify and polish the best video feature set. This project took another year to complete.

Complexity of unifying ranking across Reels and Watch

The next step, which shipped in August of 2024, was unifying our ranking layers. This had to be done after the previous layers, because ranking relies on the signals derived from the UI across surfaces being the same. For example, the “Like” button is on top of the vertical sidebar and quite prominent on the Reels UI. But on the Watch UI, it is on the bottom at the far left. They are the same signal but have different contexts, and if ranking treated them equally you would see a degradation in video recommendations.

In addition to UI unification, another significant challenge is building a ranking system that can recommend a mixed inventory of Reels and VOD content while catering to both short-form video-heavy and long-form video-heavy users. The ranking team has made tremendous progress in this regard, starting with the unification of our data, infrastructure, and algorithmic foundations across the Reels and Watch stacks. They followed with the creation of a unified content pool that includes both short-form and long-form videos, enabling greater content liquidity. The team then optimized the recommendation machine-learning (ML) models to surface the most relevant content without video-length bias, ensuring a seamless transition for users with different product affinities (e.g., Reels heavy versus Watch heavy) to a unified content-recommendation experience.

Unified Video Tab

The last step was shipping the new Video Tab. This tab uses a Reels immersive UI, with unified ranking and product infrastructure across all layers, to deliver recommendations ranging from Reels to long-form VOD and live videos. It allows us to deliver the best of all worlds from a UI, performance, and recommendations perspective.

With video unification nearly completed, we are able to accomplish much deeper integrations and complex features end to end across the stack. Read more in the section below about these features.

Video unification precursor

Before any formal video unification occurred across the Facebook app, we made a smaller effort within the video organization to modernize the Watch UI. When you tap on a video, the Watch Tab will open a new video-feed modal screen. This leads to surfaces within surfaces that can be a confusing experience. Watch Tab is also closer to the News Feed UI, which doesn’t match modern immersive video products in the industry.

This project worked to make the Watch Tab UI immersive and modern, while also flattening the feeds within feeds into a single feed. The issue was that we had not consolidated our infrastructure layers across mobile, server, and ranking. This led to slowdowns when trying to implement modern recommendation features. We also realized too late that ranking would play a key role in this project, and we made ranking changes late in the project life cycle.

These key learnings allowed the video organization to take the right steps and order of operations listed above. Without the learnings from this project, we might not have seen a successful video-unification outcome.

Mobile delivery principles

- Prioritize fresh content.

- Let ranking decide the order of content.

- Only vend content when needed, and vend as little as possible.

- Ensure your fetching behavior is deterministic.

- Give the user content when there is a clear signal they want it.

Life cycle of a video-feed network request

Video Feed Network Request End to End

Mobile client sends request

The mobile client generally has a few mechanisms in place that can trigger a network request. Each request type has its own trigger. A prefetch request is triggered a short time after app startup. A head load is triggered when the user navigates to the surface. A tail load request is triggered every time the user scrolls, with some caveats.

A prefetch and a head load can both be in flight at once. We will vend content for whichever one returns first. If both take too long, we will vend cached content.
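The race between the two requests, with the cache as a fallback, can be sketched as follows. This is a minimal illustration rather than the client's actual implementation: the function name `choose_initial_stories`, the `timeout_s` parameter, and the choice to cancel the slower request are all assumptions, since the text does not say how long the client waits or what it does with the second response.

```python
import asyncio
from typing import Awaitable, List


async def choose_initial_stories(
    prefetch: Awaitable[List[str]],
    head_load: Awaitable[List[str]],
    cached: List[str],
    timeout_s: float,
) -> List[str]:
    """Return stories from whichever request succeeds first, else the cache."""
    pending = {asyncio.ensure_future(prefetch), asyncio.ensure_future(head_load)}
    loop = asyncio.get_running_loop()
    deadline = loop.time() + timeout_s  # hypothetical wait budget
    try:
        while pending:
            remaining = deadline - loop.time()
            if remaining <= 0:
                break
            done, pending = await asyncio.wait(
                pending, timeout=remaining, return_when=asyncio.FIRST_COMPLETED
            )
            if not done:
                break  # both requests took too long
            for task in done:
                if task.exception() is None:
                    return task.result()
        return cached  # fall back to cached content
    finally:
        # Simplification: this sketch just drops the slower request.
        for task in pending:
            task.cancel()
```

Calling it with two coroutines that resolve to story lists returns the faster list, or `cached` when neither finishes within `timeout_s`.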

A tail load will be attempted every time the user scrolls, though we will issue a request only if the in-memory pool has three or fewer video stories from the network. So, if we have four stories from the network, we won’t issue the request. If we have four stories from the cache, we will issue the network request. Alongside the tail-load request, we will send user signals to ranking to help generate good video candidates for that request.
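The gating rule above can be written as a small predicate. This is a sketch under stated assumptions: the `Story` type, its `from_network` flag, and the function name are hypothetical; only the threshold of three comes from the text.

```python
from dataclasses import dataclass
from typing import List

# From the text: issue a tail load only when the pool holds
# three or fewer stories that came from the network.
MAX_NETWORK_STORIES_BEFORE_FETCH = 3


@dataclass
class Story:
    video_id: str
    from_network: bool  # False means the story was loaded from the cache


def should_issue_tail_load(pool: List[Story]) -> bool:
    """Decide on scroll whether a tail-load request should be sent."""
    network_count = sum(1 for story in pool if story.from_network)
    return network_count <= MAX_NETWORK_STORIES_BEFORE_FETCH
```

With four network stories in the pool the predicate is false. With four cached stories it is true, because cached stories do not count toward the threshold.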

Server receives request

When a client request arrives at the server, it passes through several key layers. Since it’s a GraphQL request, the first of these layers is the GraphQL framework and our GraphQL schema definition of the video data model. Next is the video-delivery stack, which is a generalized architecture capable of serving video for any product experience in Facebook. This architecture has flexibility in supporting various backing-data sources: feeds, databases such as TAO, caches, or other backend systems. So, whether you’re looking at a profile’s video tab, browsing a list of videos that use a certain soundtrack or hashtag, or visiting the new Video Tab, the video-delivery stack serves all of these.

For the Video Tab, the next step is the Feed stack. At Facebook, we have lots of feeds, so we’ve built a common infrastructure to build, define, and configure various types of feeds: the main News Feed, Marketplace, Groups—you name it. The Video Tab’s feed implementation then calls into the ranking backend service.

Across these layers, the server handles a significant amount of business logic. This includes throttling requests in response to tight data-center capacity or disaster-recovery scenarios, caching results from the ranking backend, gathering model-input data from various data stores to send to ranking, and latency tracing to log the performance of our system, as well as piping through client-input parameters that need to be passed to ranking.

Ranking receives request

Essentially, our ranking stack is a graph-based execution service that orchestrates the entire serving workflow to eventually generate a number of video stories and return them to the web server.

The recommendation-serving workflow typically includes multiple stages, such as candidate retrieval from multiple types of retrieval ML models, various filters, point-wise ranking, list-wise ranking, and heuristic-diversity control. A candidate has to pass through all of these stages to survive and be delivered.
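To make the stage ordering concrete, here is a toy pipeline in which every stage is a deliberately simple stand-in. The names (`Candidate`, `run_pipeline`, and the stage functions) and the logic inside each stage are assumptions for illustration: real retrieval and ranking use ML models, and the real service is graph-based rather than a plain loop.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set


@dataclass(frozen=True)
class Candidate:
    video_id: str
    topic: str
    score: float  # stand-in for a point-wise model prediction


Stage = Callable[[List[Candidate]], List[Candidate]]


def retrieve(sources: List[List[Candidate]]) -> List[Candidate]:
    """Merge candidates from several retrieval sources, dropping duplicates."""
    merged: Dict[str, Candidate] = {}
    for source in sources:
        for cand in source:
            merged.setdefault(cand.video_id, cand)
    return list(merged.values())


def make_filter(blocked_ids: Set[str]) -> Stage:
    """Filtering: drop candidates that must not be served."""
    return lambda cands: [c for c in cands if c.video_id not in blocked_ids]


def pointwise_rank(cands: List[Candidate]) -> List[Candidate]:
    """Point-wise ranking: order each candidate by its own score."""
    return sorted(cands, key=lambda c: c.score, reverse=True)


def listwise_rerank(cands: List[Candidate], penalty: float = 0.5) -> List[Candidate]:
    """List-wise stand-in: greedily pick, discounting already-picked topics."""
    remaining, picked = list(cands), []
    seen: Dict[str, int] = {}
    while remaining:
        best = max(remaining, key=lambda c: c.score * penalty ** seen.get(c.topic, 0))
        remaining.remove(best)
        picked.append(best)
        seen[best.topic] = seen.get(best.topic, 0) + 1
    return picked


def make_topic_cap(max_per_topic: int) -> Stage:
    """Heuristic diversity control: hard cap on videos per topic."""
    def cap(cands: List[Candidate]) -> List[Candidate]:
        counts: Dict[str, int] = {}
        kept = []
        for c in cands:
            if counts.get(c.topic, 0) < max_per_topic:
                kept.append(c)
                counts[c.topic] = counts.get(c.topic, 0) + 1
        return kept
    return cap


def run_pipeline(sources: List[List[Candidate]], stages: List[Stage]) -> List[Candidate]:
    """A candidate is delivered only if it survives every stage in order."""
    cands = retrieve(sources)
    for stage in stages:
        cands = stage(cands)
    return cands
```

A call such as `run_pipeline(sources, [make_filter(blocked), pointwise_rank, listwise_rerank, make_topic_cap(2)])` runs the stages in the order the text lists them.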

Our ranking stack also provides more advanced features to maximize user value. For example, we use the root video to contextualize the top video stories to achieve a pleasant user experience. We also have a framework called Elastic Ranking that allows dynamic variants of the ranking queries to run based on system load and capacity availability.
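One way to picture load-dependent query variants is a selector that picks the most expensive variant the current capacity can afford. This is purely a hypothetical sketch: the text does not describe how Elastic Ranking chooses variants, and `QueryVariant`, `relative_cost`, and `pick_variant` are invented names.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class QueryVariant:
    name: str
    relative_cost: float  # hypothetical cost, as a fraction of the full query


def pick_variant(variants: List[QueryVariant], available_capacity: float) -> QueryVariant:
    """Pick the costliest variant that fits; fall back to the cheapest."""
    affordable = [v for v in variants if v.relative_cost <= available_capacity]
    if not affordable:
        return min(variants, key=lambda v: v.relative_cost)
    return max(affordable, key=lambda v: v.relative_cost)
```

Under this assumption, a system under heavy load would run a lighter ranking query instead of failing the request.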

Server receives response from ranking

At the most basic level, ranking gives the server a list of video IDs and ranking metadata for each one. The server then needs to load the video entities from our TAO database and execute privacy checks to ensure that the viewer is able to see these videos. The logic of these privacy checks is defined on the server, so ranking can’t execute these privacy checks, although ranking has some heuristics to reduce the prevalence of recommending videos that the viewer won’t be able to see anyway. Then the video is passed back to the GraphQL framework, which materializes the fields that the client’s query originally asked for. These two steps, privacy checking and materialization, together and in aggregate constitute a meaningful portion of global CPU usage in our data centers, so optimizing here is a significant focus area to alleviate our data-center demand and power consumption.
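The load, privacy-check, and materialize sequence can be sketched as below. The names and callback signatures are assumptions for illustration only. `load_video` stands in for an entity lookup and `can_view` for the server-defined privacy rules; neither reflects a real TAO or GraphQL API.

```python
from typing import Any, Callable, Dict, List, Optional

Video = Dict[str, Any]


def build_response(
    ranked_ids: List[str],
    load_video: Callable[[str], Optional[Video]],
    can_view: Callable[[str, Video], bool],
    viewer_id: str,
    requested_fields: List[str],
) -> List[Video]:
    """Keep ranking order, drop non-viewable videos, return requested fields."""
    response: List[Video] = []
    for video_id in ranked_ids:
        video = load_video(video_id)  # entity load (hypothetical lookup)
        if video is None or not can_view(viewer_id, video):
            continue  # privacy check failed or entity missing
        # Materialize only the fields the client's query asked for.
        response.append({name: video.get(name) for name in requested_fields})
    return response
```

Because both steps run once per candidate on every request, dropping non-viewable videos earlier, as the ranking heuristics mentioned above aim to do, reduces the work done here.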

Mobile receives response from server

When the client receives the network response, the video stories are added to the in-memory pool. We prioritize the stories based on the server sort key, which is provided by the ranking layer, as well as on whether or not each story has been viewed by the user. In this way, we defer content prioritization to the ranking layer, which has much more complex mechanisms for content recommendation compared to the client.

By deferring to the server sort key on the client, we accomplish our key principles of deferring to ranking for content prioritization as well as prioritizing fresh content.
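A minimal sketch of this pool ordering follows. It assumes that unviewed stories come before viewed ones and that a higher `server_sort_key` means higher priority. Neither detail is stated in the text, and `PooledStory` and `next_to_vend` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PooledStory:
    video_id: str
    server_sort_key: float  # provided by the ranking layer
    viewed: bool = False


def next_to_vend(pool: List[PooledStory], count: int = 3) -> List[PooledStory]:
    """Order by (unviewed first, then server sort key) and take `count`."""
    ordered = sorted(pool, key=lambda s: (s.viewed, -s.server_sort_key))
    return ordered[:count]
```

The default of three mirrors the UI bottom buffer described in the glossary: one visible video plus two being prepared below it.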

The balance for mobile clients is between content freshness and performance/efficiency. If we wait too long for the network request to complete, the user will leave the surface. If we instantly vend cache every time the user comes to the surface, the first pieces of content the user sees may not be relevant or interesting, since they are stale.

If we fetch too often, then our capacity costs will increase. If we don’t fetch enough, relevant network content won’t be available and we will serve stale content.

When stories are vended from the in-memory pool to the UI, we also perform media prefetching; this ensures that swiping from one video to another is a seamless experience.

This is the constant balancing act we have to play on behalf of our mobile clients in the content-delivery space.

New video-feed delivery features

Dynamic pagination

In a typical delivery scenario, all users receive a page of videos with a fixed size from ranking to client. However, this approach can be limiting for a large user base where we need to optimize capacity costs on demand. User characteristics vary widely, ranging from those who never swipe down on a video to those who consume many videos in a single session. The user could be completely new to our video experience or someone who visits the FB app multiple times a day. To accommodate both ends of the user-consumption spectrum, we developed a new and dynamic pagination framework.

Under this approach, the ranking layer has full control over the video page size that should be ranked for a given user and served. The server’s role is to provide a guardrail for deterministic page-size contracts between the server and client device. In summary, the contract between ranking and server is dynamic page size, while the contract between server and client is fixed page size, with the smallest possible value. This setup helps ensure that if the quantity of ranked videos is too large, the user device doesn’t end up receiving all of them. At the same time, it simplifies client-delivery infrastructure by ensuring there is deterministic page-size behavior between the client and server.

With the above setup, ranking can provide user personalization to varying degrees. If ranking is confident in its understanding of a user’s consumption needs, it can output a larger set of ranked content. Conversely, if ranking is less confident, it can output a smaller set of ranked content. By incorporating this level of personalization, we can carefully curate content for users who are relatively new to the platform while providing a larger recommendation batch for regular users. This approach allows us to conserve capacity and serve the best content to our extremely large user base.
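The two contracts can be illustrated with a server-side pager that accepts variable-size batches from ranking and hands out fixed-size pages to the client. This is a sketch under assumptions: the text does not say what the server does with the surplus, so holding it in a buffer is a guess, and `FixedPageServer`, `fetch_ranked`, and `client_page_size` are hypothetical names.

```python
from collections import deque
from typing import Callable, Deque, List


class FixedPageServer:
    """Dynamic page size from ranking in, fixed page size to the client out."""

    def __init__(self, fetch_ranked: Callable[[], List[str]], client_page_size: int) -> None:
        self._fetch_ranked = fetch_ranked  # returns a batch of any size
        self._page_size = client_page_size
        self._buffer: Deque[str] = deque()

    def next_page(self) -> List[str]:
        # Ask ranking for more only when the buffer cannot fill a page.
        while len(self._buffer) < self._page_size:
            batch = self._fetch_ranked()
            if not batch:
                break  # ranking has nothing more to offer right now
            self._buffer.extend(batch)
        count = min(self._page_size, len(self._buffer))
        return [self._buffer.popleft() for _ in range(count)]
```

If ranking returns five videos and the client page size is two, the client receives two videos per request, and ranking is called again only once the buffer can no longer fill a page.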

Real-time ranking

Real-time ranking adjusts video-content ranking based on user interactions and engagement signals, delivering more relevant content as users interact with the platform.

Accurate ranking depends on collecting real-time signals such as video view time, likes, and other interactions. These signals can be collected through asynchronous data pipelines or through synchronous ones, such as piggybacking a batch of signals onto the next tail-load request. The quality of this process depends on system latency and signal completeness. It’s important to note that if the snapshot of real-time signals between two distinct ranking requests is similar, there is little to no adjustment that ranking can perform to react to the user’s current interest.
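The synchronous option, piggybacking signals onto the next tail-load request, might look like the following on the client. The request shape, the field names, and the `SignalBuffer` class are assumptions for illustration and do not reflect the actual request schema.

```python
from dataclasses import asdict, dataclass
from typing import Any, Dict, List


@dataclass
class Signal:
    video_id: str
    kind: str     # e.g. "view_time_ms" or "like" (hypothetical labels)
    value: float


class SignalBuffer:
    """Collects signals between requests and hands them over in one batch."""

    def __init__(self) -> None:
        self._pending: List[Signal] = []

    def record(self, signal: Signal) -> None:
        self._pending.append(signal)

    def drain(self) -> List[Signal]:
        batch, self._pending = self._pending, []
        return batch


def build_tail_load_request(pagination_token: str, buffer: SignalBuffer) -> Dict[str, Any]:
    """Attach every signal recorded since the previous request."""
    return {
        "pagination_token": pagination_token,
        "signals": [asdict(s) for s in buffer.drain()],
    }
```

If the user has not interacted between two requests, the second request carries an empty signal batch, which is the case described above where ranking has little to react to.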

Ranking videos in real time ensures prominent display of relevant and engaging content, while eliminating duplicates for content diversification as well as new topic exploration. This approach enhances user engagement by providing a personalized and responsive viewing experience, adapting to user preferences and behaviors in real time during app sessions.

Glossary of Terms

- Network request types

  - Prefetch: A request issued before the surface is visible. Prefetch is available only for our tab surface.

  - Head load: The initial network request. It has no pagination token associated with it.

  - Tail load: Includes all the subsequent requests. A tail load carries a pagination token as well as the user signals captured during the user session.

- Client mechanisms

  - Cache: The disk cache we write unseen videos to for future viewing sessions.

  - In-memory pool: The memory store for video stories that are ready for user viewing. These videos can be from our cache or from network requests.

  - Vending/Vend: Moving a video story from the pool to the UI layer.

  - UI bottom buffer: When we vend, we actually vend multiple stories to the UI layer, so we have one video ready for the viewer to watch and two stories that are being prepared to be viewed below the visible video.

- Performance and efficiency measurements

  - Latency: The amount of delay a request encounters when going through our end-to-end delivery system. Total latency is calculated by adding together all the time delays through our system.

  - Initial load time: The time it takes for video playback to begin once a user touches the user interface to navigate to the surface.

  - Tail-load time: The period of time a loading UI is shown to the user during a tail-load request.

  - Capacity: Physical infrastructure required to fulfill the network request. The network request has some dollar cost associated with it when done at scale.

- End-to-end delivery

  - Freshness: We define freshness by how long ago ranking generated a video candidate. We generally consider fresher content to be better, since it operates on more recent and relevant signals. At times, content age is also a helpful measure to understand when a video was created by the creator.

  - Responsiveness: This term encompasses how quickly and regularly we deliver fresh content to the user, from the network request issued by the mobile client to when the client vends the video in the UI for the user to see. Lots of things can present challenges here. Think of responsiveness as how well and consistently our end-to-end infrastructure delivers fresh content.

  - Repetitiveness: How often we show the same video to the user. Tracking this helps us ensure the user experience is as unique and engaging as possible and allows the system to explore new topics for given users.