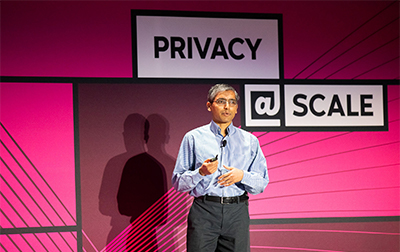

The @Scale Conference 2019

OCTOBER 16, 2019 @ 8:00 AM PDT - 6:00 PM PDT

The annual @Scale conference is an invite-only, in-person conference hosted in the fall of each year in the Bay Area, California. The event invites engineers and industry leaders from all over the world to come together to discuss leading @Scale topics and network with their peers. Attendees will be able to participate in several thought-provoking general sessions and four separate tracks highlighting the year's themes.

ABOUT EVENT

The annual @Scale conference is today at the San Jose Convention Center!

This year’s event features technical deep dives from engineers at a multitude of scale companies. Amazon Web Services, Box, Confluent, Cloudflare, Facebook, Google, Lyft, and NVIDIA are scheduled to appear. Attendees will be able to participate in four tracks: AI, Data Infra, Privacy and Security. We also have our scale system demonstration area back for a fourth year so engineers can interact with the latest tech.

October 16

SPEAKERS AND MODERATORS

Sambavi Muthukrishnan

Facebook

Tamar Bercovici

Box

Ashraf Karim

Paypal

Srinivas Narayanan leads Applied AI Research at Facebook doing research and development in a...

Srinivas Narayanan

Facebook

Lea is the Chief Privacy Officer of Humu. She works to build respect for...

Lea Kissner

Humu

Ruoming Pang

Google

Ashesh Jain

Lyft

Krishnaram Kenthapadi

LinkedIn

Shannon Zhu

Facebook

Neha Deodhar

YugaByte

Vinaya Polamreddi

Facebook

Subodh Iyengar

Facebook

Samuel Rhea

Cloudflare

Evan Johnson

Cloudflare

Miguel Azevedo

WhatsApp

Jones Udo-akang

Facebook

Jana van Greunen

Facebook

Sharan Chetlur

NVIDIA

Nick Sullivan

Cloudflare

Mingtao Yang

Facebook

Ajanthan Asogamoorthy

Facebook

Akshat Vig is a Principal Engineer in Amazon DynamoDB (Amazon Web Services). He has...

Akshat Vig

Amazon Web Services

Romer Rosales is a Sr. Director of Artificial Intelligence (AI) at LinkedIn. His team...

Romer Rosales

LinkedIn

Jessie ‘Chuy’ Chavez is a Senior Software Engineer and Manager of the Data Liberation...

Jessie Chavez

Google

William is a Software Engineer at Facebook where he is a member of the...

William Morland

Facebook

Hongyi Hu is an engineering manager at Dropbox, where he leads the Application Security...

Hongyi Hu

Dropbox

Ganesh Srinivasan is vice president of engineering at Confluent, provider of an event streaming...

Ganesh Srinivasan

Confluent

Joe is the Product Lead at Facebook for PyTorch with a focus on building...

Joe Spisak

Facebook

Anthony is a Data Engineer on the Firefox Data Platform.

Anthony Miyaguchi

Mozilla Corporation

Bartley Richardson is a Senior Data Scientist and AI Infrastructure Manager (RAPIDS) at NVIDIA....

Bartley Richardson

NVIDIA

Sebastian is an engineering manager at Facebook. He has been at Facebook for over...

Sebastian Wong

Facebook

Karthik Raghunathan is the Head of Machine Learning of the Webex Intelligence Group at Cisco....

Karthik Raghunathan

Cisco Systems

Vladimir Fedorov

Facebook

Shamiq is currently Director of Security at Coinbase, where he looks after Application Security,...

Shamiq Islam

Coinbase

EVENT AGENDA

Event times below are displayed in PT.

October 16

08:00 AM

-

09:00 AM

Registration and Breakfast

09:00 AM

-

09:50 AM

Women's Leadership Breakfast

Speaker

Sambavi Muthukrishnan,Facebook

Speaker

Tamar Bercovici,Box

Speaker

Ashraf Karim,Paypal

10:00 AM

-

10:30 AM

KEYNOTE: AI - The Next Big Scaling Frontier

10:35 AM

-

11:05 AM

KEYNOTE: Engineering For Respect: Building Systems For The World We Live In

11:20 AM

-

11:50 AM

DATA INFRA: Zanzibar: Google’s Consistent, Global Authorization System

Determining whether online users are authorized to access digital objects is central to preserving privacy. This talk presents the design, implementation, and deployment of Zanzibar, a global system for storing and evaluating access control lists. Zanzibar provides a uniform data model and configuration language for expressing a wide range of access control policies from hundreds of client services at Google, including Calendar, Cloud, Drive, Maps, Photos, and YouTube. Its authorization decisions respect causal ordering of user actions and thus provide external consistency amid changes to access control lists and object contents. Zanzibar scales to trillions of access control lists and millions of authorization requests per second to support services used by billions of people. It has maintained 95th-percentile latency of less than 10 milliseconds and availability of greater than 99.999% over 3 years of production use.

Speaker

Ruoming Pang,Google

11:20 AM

-

11:50 AM

AI: Unique Challenges and Opportunities for Self-Supervised Learning in Autonomous Driving

Autonomous vehicles generate a lot of raw (unlabeled) data every minute. However, only a small fraction of that data can be labeled manually. My talk will focus on how we leverage the unlabeled data for the tasks of perception and prediction in a self-supervised manner. There are a few ways to achieve this that are unique to the AV land: one of them is cross-modal self-supervised learning, where one modality can serve as a learning signal for another modality without the need for labeling (such as using depth from LiDAR to train a monocular depth neural network on images). Another approach that is unique to AVs is to use the outputs of large-scale optimization (which can only run in non-real-time in the cloud, such as SLAM) as a learning signal to train neural networks that mimic those outputs but can run in real time on the AV. The talk will also touch upon how we can leverage the Lyft fleet to oversample the long-tail events and hence learn the long tail.

Speaker

Ashesh Jain,Lyft

11:20 AM

-

11:50 AM

PRIVACY: Fairness and Privacy in AI/ML Systems

How do we protect the privacy of users when building large-scale AI-based systems? How do we develop machine-learned models and systems taking fairness, accountability, and transparency into account? With the ongoing explosive growth of AI/ML models and systems, these are some of the ethical, legal, and technical challenges encountered by researchers and practitioners alike. In this talk, we will first motivate the need for adopting a "fairness and privacy by design" approach when developing AI/ML models and systems for different consumer and enterprise applications. We will then focus on the application of fairness-aware machine learning and privacy-preserving data mining techniques in practice by presenting case studies spanning different LinkedIn applications (such as fairness-aware talent search ranking, privacy-preserving analytics and LinkedIn Salary privacy & security design).

Speaker

Krishnaram Kenthapadi,LinkedIn

11:20 AM

-

11:50 AM

SECURITY: Leveraging the Type System to Write Secure Applications

How to extend the type system to eliminate entire classes of security vulnerabilities at scale. Application security remains a long-term and high-stakes problem for most projects that interact with external users. Python's type system is already widely used for readability, refactoring, and bug detection — this talk will demonstrate how types can also be leveraged to make your project systematically more secure. We'll investigate (1) how static type checkers like Pyre or MyPy can be extended with simple library modifications to catch vulnerable patterns, and (2) how deeper type-based static analysis can reliably flag remaining use cases to security engineers. As an example, I'll focus on a basic security problem and how you might use both tools in combination, drawing from our experience deploying these methods to build more secure applications at Facebook and Instagram.

Speaker

Shannon Zhu,Facebook
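
As a rough illustration of the type-based idea in the abstract above, the sketch below uses Python's standard `typing.NewType` so that a static checker can tell escaped strings from raw ones. The names `SafeHtml`, `escape`, and `render_comment` are hypothetical and are not taken from the talk or from Pyre; this is one simple pattern under those assumptions, not the speaker's actual method.

```python
import html
from typing import NewType

# Hypothetical marker type: a string that has already been HTML-escaped.
SafeHtml = NewType("SafeHtml", str)


def escape(untrusted: str) -> SafeHtml:
    """The only sanctioned way to produce a SafeHtml value in this sketch."""
    return SafeHtml(html.escape(untrusted))


def render_comment(body: SafeHtml) -> str:
    """Accepts only SafeHtml, so a static checker rejects raw str arguments."""
    return f"<p>{body}</p>"


user_input = "<script>alert(1)</script>"
page = render_comment(escape(user_input))

# The next line still runs at runtime, but a static checker such as mypy
# or Pyre reports a type error because a plain str is not a SafeHtml:
# render_comment(user_input)
```

The check happens entirely at analysis time: `NewType` adds no runtime wrapper, so the protection depends on the type checker actually being run over the code base.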

12:00 PM

-

12:30 PM

DATA INFRA: 6 Technical Challenges Developing a Distributed SQL Database

Developing YugaByte DB was not without its fair share of technical challenges. There were times when we had to go back to the drawing board and even sift through academic research to find a better solution than what we had at hand. In this talk we’ll outline some of the hardest architectural issues we have had to address in our journey of building an open source, cloud native, high-performance distributed SQL database. Topics include architecture, SQL compatibility, distributed transactions, consensus algorithms, atomic clocks and PostgreSQL code reuse.

Speaker

Neha Deodhar,YugaByte

12:00 PM

-

12:30 PM

AI: Reading Text from Visual Content @Scale

There are billions of images and videos posted on Facebook every day, and a significant percentage of them contain text. It is crucial to understand the text within visual content to provide people with better Facebook product experiences and remove harmful content. Traditional optical character recognition systems are not effective on the huge diversity of text in different languages, shapes, fonts, sizes and styles. In addition to the complexity of understanding the text, scaling the system to run high volumes of production traffic efficiently and in real time creates another set of engineering challenges. Attendees of this talk will learn about multiple innovations across modeling, training infrastructure, deployment infrastructure and efficiency measures we made to build our state-of-the-art OCR system running at Facebook scale.

Speaker

Vinaya Polamreddi,Facebook

12:00 PM

-

12:30 PM

PRIVACY: Fighting Fraud Anonymously

Logging is essential to running a service or an app. However, every app faces a dilemma: the more data is logged, the better we understand the problems of users, but the less privacy they have. One way to add privacy is to report events without identifying users; however, this can allow fraudulent logs to be reported. In this talk I’ll introduce the problem of fraudulent reporting and our approach to using cryptography, including blind signatures, to enable fraud-resistant anonymous reporting. I’ll talk about a specific application to ads, how fraud-resistant private reporting is feasible, and different approaches, and also discuss some open problems.

Speaker

Subodh Iyengar,Facebook
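
To make the blind-signature idea mentioned in the abstract above more concrete, here is a minimal sketch of the textbook RSA blind signature. It is an assumption that RSA is a reasonable stand-in; the talk's actual scheme may differ. The key uses tiny textbook primes and is insecure, and `token` is a hypothetical report token.

```python
import math
import secrets

# Toy RSA key (assumption: tiny textbook primes, insecure, illustration only).
P, Q = 61, 53
N = P * Q   # public modulus, 3233
E = 17      # public exponent
D = 2753    # private exponent, since E * D == 1 mod (P - 1) * (Q - 1)


def blind(message: int) -> tuple[int, int]:
    """Client: hide the message with a random factor r before sending it."""
    while True:
        r = secrets.randbelow(N - 2) + 2
        if math.gcd(r, N) == 1:  # r must be invertible mod N
            break
    return (message * pow(r, E, N)) % N, r


def sign_blinded(blinded: int) -> int:
    """Signer: signs the blinded value without seeing the message itself."""
    return pow(blinded, D, N)


def unblind(blinded_sig: int, r: int) -> int:
    """Client: remove the blinding factor to get a signature on the message."""
    return (blinded_sig * pow(r, -1, N)) % N


def verify(message: int, signature: int) -> bool:
    """Anyone with the public key can check the unblinded signature."""
    return pow(signature, E, N) == message % N


token = 1234  # hypothetical report token, must be smaller than N
blinded, r = blind(token)
signature = unblind(sign_blinded(blinded), r)
```

Because the signer only ever sees `blinded`, it cannot later link `signature` to the request it signed, which is the property that lets a report be both authorized and anonymous.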

12:00 PM

-

12:30 PM

SECURITY: Securing SSH Traffic to 190+ Data Centers

Cloudflare maintains thousands of servers in over 190 points of presence that need to be accessed from multiple offices. We relied on a private network and SSH keys to securely connect to those machines. However, that private network perimeter posed a risk if breached and those keys had to be carefully managed and revoked as needed. To solve those challenges, we built and migrated to a model where we expose those servers to the public internet and authenticate with an identity provider to reach those servers. We deployed a system that leverages ephemeral certificates, based on user identity, so that we could delete our SSH keys as an organization. We are here to share what we learned in the three years that Cloudflare has been building a zero-trust layer on top of its existing network to secure both HTTP and non-HTTP traffic.

Speaker

Samuel Rhea,Cloudflare

Speaker

Evan Johnson,Cloudflare

12:30 PM

-

02:00 PM

Lunch & Office Hours

12:45 PM

-

01:45 PM

Student Program

Speaker

Miguel Azevedo,WhatsApp

Speaker

,

Speaker

Jones Udo-akang,Facebook

02:00 PM

-

02:30 PM

DATA INFRA: Architecture of a Highly Available Sequential Data Platform

LogDevice is a unified, high-throughput, low-latency platform for handling a variety of data streaming and logging needs. Specifically, this talk will dive into the architectural details and the variants of Paxos used in LogDevice to provide scalability, availability, and data management. These provide essential flexibility to simplify the applications built on top of the system.

Speaker

Jana van Greunen,Facebook

02:00 PM

-

02:30 PM

AI: Multi-Node Natural Language Understanding at Scale

This session includes a deep look into the world of multi-node training for complex NLU models like BERT. Sharan will describe the challenges of tuning for speed and accuracy at scales needed to bring training times down from weeks to minutes. Drawing from real world experience running models on up to ~1500 GPUs with reduced precision techniques, he will dive into the impact of different optimizers, strategies to reduce communication time, and improvements to per-GPU performance.

Speaker

Sharan Chetlur,NVIDIA

02:00 PM

-

02:30 PM

PRIVACY: DNS Privacy at Scale: Lessons and Challenges

It’s no secret that the use of the domain name system (DNS) reveals a lot of information about what people do online. The use of traditional unencrypted DNS protocols reveals this information to third parties on the network, introducing privacy risks to users as well as enabling country-level censorship. In recent years, Internet protocol designers have sought to retrofit DNS with several new privacy mechanisms to help provide confidentiality to DNS queries. The results of this work include technologies such as DNS-over-TLS, DNS-over-HTTPS and encrypted SNI for TLS. In this talk, we’ll share some of the technical and political challenges around deploying these technologies.

Speaker

Nick Sullivan,Cloudflare

02:00 PM

-

02:30 PM

SECURITY: Enforcing Encryption @Scale

Facebook runs a global infrastructure that supports thousands of services, with many new ones spinning up daily. Protecting network traffic is taken very seriously, and engineers must have a sustainable way to enforce security policies transparently and globally. One requirement is that all traffic that crosses “unsafe” network links must be encrypted with TLS 1.2 or above using secure modern ciphers and robust key management. Mingtao and Ajanthan describe the infrastructure they built for enforcing the “encrypt all” policy on the end hosts, as well as alternatives and trade-offs encompassing how they use BPF programs. Additionally, they discuss Transparent TLS (TTLS), a solution that they’ve built for services that could not enable TLS natively or could not easily upgrade to a newer version of TLS.

Speaker

Mingtao Yang,Facebook

Speaker

Ajanthan Asogamoorthy,Facebook
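
The talk describes host-level enforcement with BPF, which is not reproduced here. For comparison, the sketch below shows what the "TLS 1.2 or above with modern ciphers" requirement from the abstract looks like when a single application opts in natively, using Python's standard `ssl` module. The cipher string is an assumption chosen for illustration, not a policy taken from the talk.

```python
import ssl


def strict_client_context() -> ssl.SSLContext:
    """Build a client-side context that refuses anything older than TLS 1.2."""
    ctx = ssl.create_default_context()  # certificate and hostname checks on
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # Assumed cipher policy for TLS 1.2: forward-secret AEAD suites only.
    # TLS 1.3 suites are not affected by set_ciphers.
    ctx.set_ciphers("ECDHE+AESGCM:ECDHE+CHACHA20")
    return ctx


ctx = strict_client_context()
```

A per-application setting like this only helps services that can be changed and redeployed, which is the gap the abstract says transparent, host-level enforcement is meant to close.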

02:35 PM

-

03:05 PM

DATA INFRA: Amazon DynamoDB: Fast and flexible NoSQL database service for any scale

Amazon DynamoDB is a hyperscale, NoSQL database designed for internet-scale applications, such as serverless web apps, mobile backends, and microservices. DynamoDB provides developers with the security, availability, durability, performance, and manageability they need to run mission-critical workloads at extreme scale. In this session, we dive deep into the underpinnings of DynamoDB and how we run a fully managed, nonrelational database service that is used by more than 100,000 customers. We look under the hood of DynamoDB and discuss how features such as DynamoDB Streams, ACID transactions, continuous backups, point-in-time recovery (PITR), and global tables work @scale. We also share some of our key learnings in building a highly durable, highly scalable, and highly available key-value store that you can apply when building your large-scale systems.

Speaker

Akshat Vig,Amazon Web Services

02:35 PM

-

03:05 PM

AI: Cross Product Optimization

Artificial Intelligence (AI) is behind practically every product experience at LinkedIn. From ranking the member’s feed to recommending new jobs, AI is used to fulfill our mission to connect the world’s professionals to make them more productive and successful. While product functionality can be decomposed into separate components, they are beautifully interconnected; thus, creating interesting questions and challenging AI problems that need to be solved in a sound and practical manner. In this talk, I will provide an overview of lessons learned and approaches we have developed to address these problems, including scaling to large problem sizes, handling multiple conflicting objective functions, efficient model tuning, and our progress toward using AI to optimize the LinkedIn product ecosystem more holistically.

Speaker

Romer Rosales,LinkedIn

02:35 PM

-

03:05 PM

PRIVACY: Data Transfer Project - Expanding Data Portability at Scale

The Data Transfer Project was launched in 2018 to create an open-source, service-to-service data portability platform so that all individuals across the web could easily move their data between online service providers whenever they want. This talk will give a technical overview of the architecture as well as several components developed to support the Data Transfer Project’s ecosystem including common data models, the use of industry standards, the adapter framework, and more. We will walk through a core framework developer setup as well as how to integrate with the project and provide users the ability to port data into and out of your services.

Speaker

Jessie Chavez,Google

Speaker

William Morland,Facebook

02:35 PM

-

03:05 PM

SECURITY: The Call is Coming From Inside the House: Lessons in Securing Internal Apps

Locking down internal apps presents unique and frustrating challenges for appsec teams. Your organization may have dozens if not hundreds of sensitive internal tools, dashboards, control panels, etc., running on heterogeneous technical stacks with varying levels of code quality, technical debt, external dependencies, and maintenance commitments. How do you tackle this problem scalably with limited resources? Come hear a dramatic and humorous tale of internal appsec and the technical and management lessons we learned along the way. Even if your focus is on securing external apps, this talk will be relevant for you. You’ll hear about what worked well for us and what didn’t, including:

- Finding a useful mental model to organize your roadmap
- Starting with the basics: authn/z, TLS, etc.
- Rolling out Content Security Policy
- Using SameSite cookies as a powerful entry point regulation mechanism
- Leveraging WAFs for useful detection and response
- Using internal apps as a training ground for new security engineers

Speaker

Hongyi Hu,Dropbox

03:05 PM

-

03:25 PM

Office Hours

03:25 PM

-

03:55 PM

DATA INFRA: Kafka @Scale: Confluent’s Journey Bringing Event Streaming to the Cloud

As streaming platforms become central to data strategies, companies both small and large are re-thinking their architecture with real-time context at the forefront. What was once a ‘batch’ mindset is quickly being replaced with stream processing as the demands of the business impose more and more real-time requirements on developers and architects. What started at companies like LinkedIn, Facebook, Uber, Netflix and Yelp has made its way to countless others in a variety of sectors. Today, thousands of companies across the globe build their businesses on top of Apache Kafka®. This talk will be a deep dive into the evolution and future of event streaming and the lessons that Confluent learned through its journey to make the platform cloud-native.

Speaker

Ganesh Srinivasan,Confluent

03:25 PM

-

03:55 PM

AI: Pushing the State of the Art in AI with PyTorch

In this session we will deep dive into how PyTorch is being used to help accelerate the path from novel research to large-scale production deployment in computer vision, natural language processing, and machine translation at Facebook. We’ll also explore the latest product updates, libraries built on top of PyTorch and new resources for getting started.

Speaker

Joe Spisak,Facebook

03:25 PM

-

03:55 PM

PRIVACY: Firefox Origin Telemetry with Prio

Measuring browsing behavior by site origin can provide actionable insights into the broader web ecosystem in areas such as blocklist efficacy and web compatibility. However, an individual’s browsing history contains deeply personal information that browser vendors should not collect wholesale. In this talk, we discuss how we can precisely measure aggregate page-level statistics using Prio, a privacy-preserving data collection system developed by Stanford researchers and deployed in Firefox. In Prio, a small set of servers verify and aggregate data through the exchange of encrypted shares. As long as one server is honest, there is no way to recover individual data points. We will explore the challenges faced when implementing Prio, both in Firefox and its Data Platform. We will touch on how we have validated our deployment of Prio through two experiments: one which collects known Telemetry data and one which collects new data on the application of Firefox’s blocklists across the web. We will share the results of these experiments and discuss how they’ve informed our future plans.

Speaker

Anthony Miyaguchi,Mozilla Corporation
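
The "exchange of shares" in the abstract above can be illustrated with additive secret sharing, the building block that Prio-style aggregation rests on. This is a minimal sketch under stated assumptions: the modulus, the function names, and the sample data are hypothetical, and the validity proofs and encryption that a real deployment needs are left out entirely.

```python
import secrets

# Assumption: any public prime larger than the final sum works here.
MODULUS = 2**61 - 1


def split(value: int, n_servers: int) -> list[int]:
    """Split value into n additive shares that sum to value mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_servers - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def aggregate(per_client_shares: list[list[int]]) -> int:
    """Each server sums the shares it received; only those totals are combined."""
    server_totals = [sum(column) % MODULUS for column in zip(*per_client_shares)]
    return sum(server_totals) % MODULUS


# Hypothetical data: 1 if a blocklist rule fired for a client, otherwise 0.
client_values = [1, 0, 1, 1, 0]
total = aggregate([split(v, n_servers=2) for v in client_values])
```

Each individual share is a uniformly random number, so a server that keeps its shares private prevents the others from reconstructing any single client's value, while the combined totals still give the exact sum.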

03:25 PM

-

03:55 PM

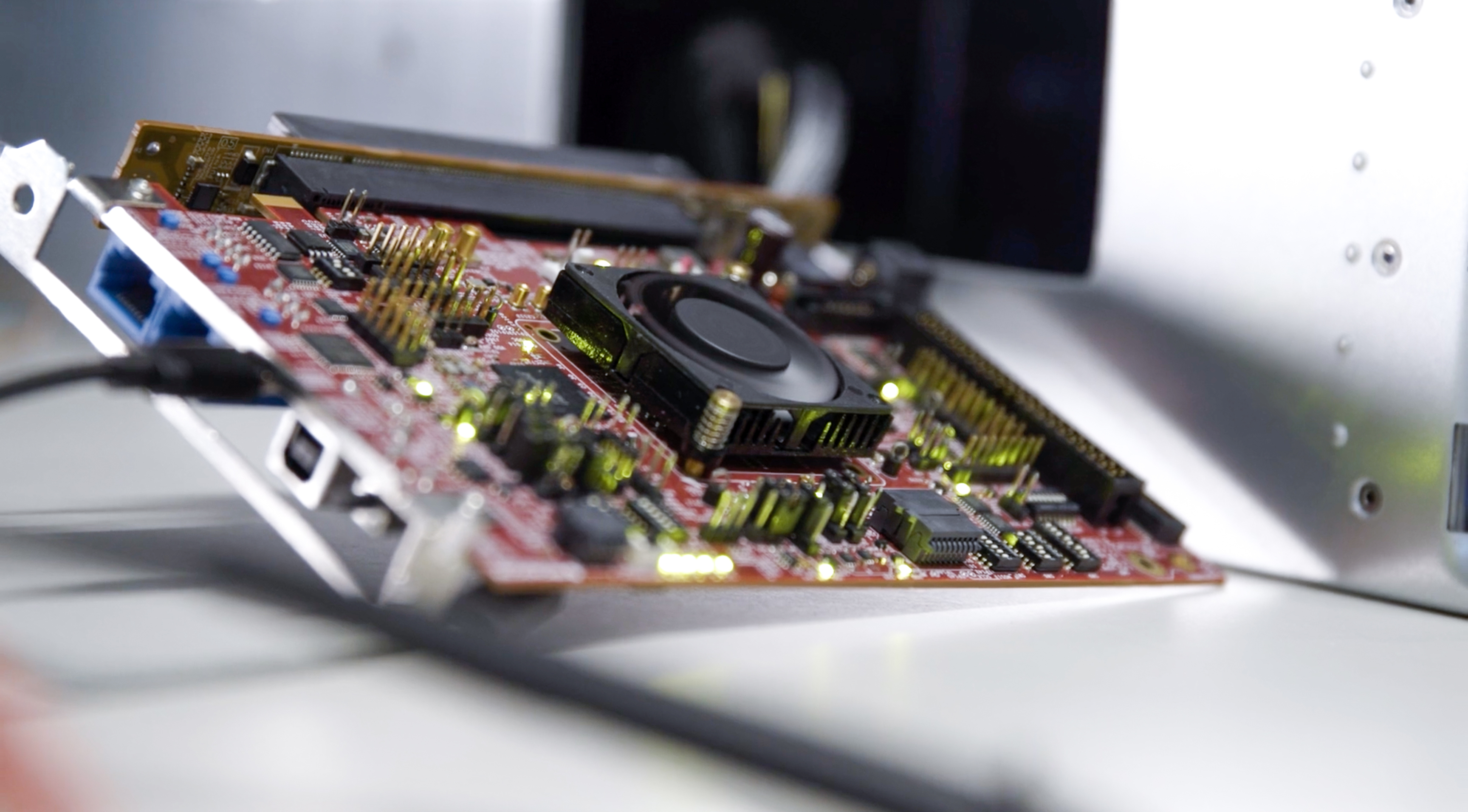

SECURITY: Streaming, Flexible Log Parsing with Real-Time Applications

Logs from cybersecurity appliances are numerous, are generated from heterogeneous sources, and are frequently victim to poor hygiene and malformed content. Relying on an already understaffed human workforce to constantly write new parsers, triage incorrectly parsed data, and keep up with ever-increasing data volumes is bound to fail. Using RAPIDS, an open-source data science platform, we show how creating a more flexible, neural network approach to log parsing can overcome these obstacles. Presented is an end-to-end workflow that begins with raw logs, applies flexible parsing, and then applies stream analytics (e.g., rolling z-score for anomaly detection) to the near real-time parsing. By keeping the entire workflow on GPUs (either on-premises or in a cloud environment), we demonstrate near real-time parsing and the ability to scale to large volumes of incoming logs.

Speaker

Bartley Richardson,NVIDIA
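
The abstract above mentions a rolling z-score as one example of stream analytics for anomaly detection. The sketch below shows that calculation on the CPU with the Python standard library only; it does not use RAPIDS or GPUs, and the window size, threshold, and sample counts are assumptions made for illustration.

```python
from collections import deque
from statistics import mean, pstdev


def rolling_zscores(values, window=5):
    """Yield (value, z), scoring each value against the previous `window` values."""
    history = deque(maxlen=window)
    for x in values:
        if len(history) == window:
            mu, sigma = mean(history), pstdev(history)
            # Simplification: a perfectly flat window yields z = 0.
            z = (x - mu) / sigma if sigma > 0 else 0.0
        else:
            z = 0.0  # not enough history yet
        yield x, z
        history.append(x)


# Hypothetical per-second counts of parsed log events.
counts = [100, 102, 98, 101, 99, 100, 250, 101]
anomalies = [x for x, z in rolling_zscores(counts) if abs(z) > 3.0]
```

Scoring each point against the preceding window rather than the whole stream keeps memory use constant and lets the baseline follow gradual changes in log volume.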

04:30 PM

-

05:00 PM

DATA INFRA: Disaggregated Graph Database with Rich Read Semantics

Access to the social graph is a mission-critical workload for Facebook. Supporting a graph data model is inherently difficult because the underlying system has to be capable of efficiently supporting the combinatorial explosion of possible access patterns that can arise from even a single traversal. In this session, we will discuss the introduction into our serving stack of an ecosystem of disaggregated secondary indexing microservices, which are kept up to date and consistent with the source of truth via access to a shared log of updates. This work has enabled Facebook to efficiently accommodate access pattern diversity by transparently optimizing graph accesses under the hood. It is responsible for a reduction of more than 50% in read queries to the source of truth and has unlocked the ability for product developers to access the graph in new, game-changing ways. We will also discuss the logical next steps that we are exploring as a consequence of this work.

Speaker

Sebastian Wong,Facebook

04:30 PM

-

05:00 PM

AI: Natural Language Processing for Production-Level Conversational Interfaces

Conversational applications often are over-hyped and underperform. While there's been significant progress in Natural Language Understanding (NLU) in academia and a huge growing market for conversational technologies, NLU performance significantly drops when you introduce language with typos or other errors, uncommon vocabulary, and more complex requests. This talk will cover how to build a production-quality conversational app that performs well in a real-world setting. We will cover the domain-intent-entity classification hierarchy that has become an industry standard, and describe our extensions to this standard architecture such as entity resolution and shallow semantic parsing that further improve system performance for non-trivial use cases. We demonstrate an end-to-end approach for consistently building conversational interfaces with production-level accuracies that has proven to work well for several applications across diverse verticals.

Speaker

Karthik Raghunathan,Cisco Systems

04:30 PM

-

05:00 PM

PRIVACY: Fireside Chat

Speaker

Lea Kissner,Humu

Speaker

Vladimir Fedorov,Facebook

04:30 PM

-

05:00 PM

SECURITY: Automated Detection of Blockchain Network Events

Ever wondered what goes on behind the scenes to keep user assets safe in the notoriously dangerous field of cryptocurrency custodianship? It turns out you can model cryptocurrency protocols after existing communications networks, then build tooling to monitor and respond to threats as they emerge. We’ll start with examples of threats we’re deeply concerned about, then talk through how we detect those threats. If you’re thinking about building similar tools, you’ll want to stick around and hear the challenges we faced extending our tools from one protocol and heuristic to many protocols and heuristics.

Speaker

Shamiq Islam,Coinbase

05:00 PM

-

06:00 PM

Social Hour & Office Hours