Introduction

Back in 2018, the Alliance for Open Media (AOMedia) standardized its first codec, the AV1 video codec. Since then, implementations of the codec have matured, and companies including YouTube, Netflix, and Meta have recently deployed it at large scale for video streaming. At Meta, we believe that now is the time to start adopting AV1 for real-time communications (RTC) to deliver higher-quality calling. Multiple implementations are now available, both commercial and open source, and this year hardware-offload support is starting to ship in Apple, MediaTek, and Qualcomm chipsets.

Yet adopting AV1 is a major step and a challenge. The previous codec generation, HEVC/H.265, never saw much adoption in real-time use cases, mainly due to its complex licensing situation. Codec licensing is one of the bigger challenges for real-time communication, since RTC requires both encoder and decoder support on large numbers of end-user devices. Calling services are usually free for consumers, which makes it hard for providers to justify purchasing an expensive IP license. AOMedia’s goal of creating royalty-free technology, together with the availability of open-source implementations, removes much of that obstacle. This means, however, that most players will be upgrading from the generation before that, H.264, a 20-year-old codec specification. That is a big leap. This blog post covers the challenges involved in making that leap and discusses what we at Meta are doing to address them.

Advantages of adopting AV1 on RTC

The biggest reason to move to a more advanced video codec is simple: The same quality experience can be delivered with a much lower bitrate, and for real-time calling we can deliver a much higher-quality experience for our users who are on bandwidth-constrained networks. Measuring video quality is a complex topic, but a relatively simple way to look at it is to use the BD-BR (Bjontegaard Delta-Bit Rate) metric. BD-BR compares how much bitrate different codecs need to produce the same quality level. Encoding the same content at several bitrates and measuring the quality of each result gives a rate-distortion (RD) curve for each codec, and from the RD curves you can derive the BD-BR, as shown below:
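As a rough illustration of how a BD-BR figure is derived from two RD curves, here is a minimal, dependency-free sketch. It is a simplified variant: the commonly used Bjontegaard calculation fits a cubic polynomial to each curve, whereas this sketch substitutes piecewise-linear interpolation of log-bitrate over quality. The function names and the sample points are hypothetical and are not measurements from our pipeline.

```python
import math


def _mean_log_rate(points, lo, hi):
    """Average log(bitrate) over the quality range [lo, hi].

    `points` is a list of (quality, log_rate) pairs with distinct quality
    values; samples are connected with straight lines.
    """
    pts = sorted(points)

    def interp(q):
        for (q0, r0), (q1, r1) in zip(pts, pts[1:]):
            if q0 <= q <= q1:
                return r0 + (r1 - r0) * (q - q0) / (q1 - q0)
        raise ValueError("quality outside sampled range")

    # Integrate with the trapezoid rule between every sample inside the range.
    knots = [lo] + [q for q, _ in pts if lo < q < hi] + [hi]
    area = 0.0
    for a, b in zip(knots, knots[1:]):
        area += 0.5 * (interp(a) + interp(b)) * (b - a)
    return area / (hi - lo)


def bd_rate(ref, test):
    """Percent bitrate change of `test` versus `ref` at equal quality.

    Both arguments are lists of (bitrate_kbps, quality_db) samples.
    A negative result means `test` needs less bitrate than `ref`.
    """
    ref_pts = [(q, math.log(r)) for r, q in ref]
    test_pts = [(q, math.log(r)) for r, q in test]
    # Only compare over the quality range that both curves cover.
    lo = max(min(q for q, _ in ref_pts), min(q for q, _ in test_pts))
    hi = min(max(q for q, _ in ref_pts), max(q for q, _ in test_pts))
    diff = _mean_log_rate(test_pts, lo, hi) - _mean_log_rate(ref_pts, lo, hi)
    return (math.exp(diff) - 1.0) * 100.0


# Synthetic curves (not measurements): the second codec reaches each
# quality level with 70% of the reference bitrate.
reference = [(100, 30.0), (200, 33.0), (400, 36.0), (800, 39.0)]
candidate = [(70, 30.0), (140, 33.0), (280, 36.0), (560, 39.0)]
print(bd_rate(reference, candidate))  # approximately -30
```

Averaging the difference in the log domain is what makes the result a relative (percentage) saving rather than an absolute bitrate gap.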

When we compare our existing H.264-based solution with AV1, we see a BD-BR savings of around 30%, which is a major improvement. This 30% figure was measured on typical video-calling content such as a talking head. For a more intuitive view, simply compare the visual quality of the two codecs. We enabled AV1 in the Messenger App, and in the video examples below you can view video calls on two iPhones side by side: AV1 on the left and H.264 on the right. Bitrate is restricted to 100 kbps. You can see that the H.264 video is blurry and the AV1 video is clearer. This is a good example of the benefit of adopting AV1.

Screen-Content Coding Tools

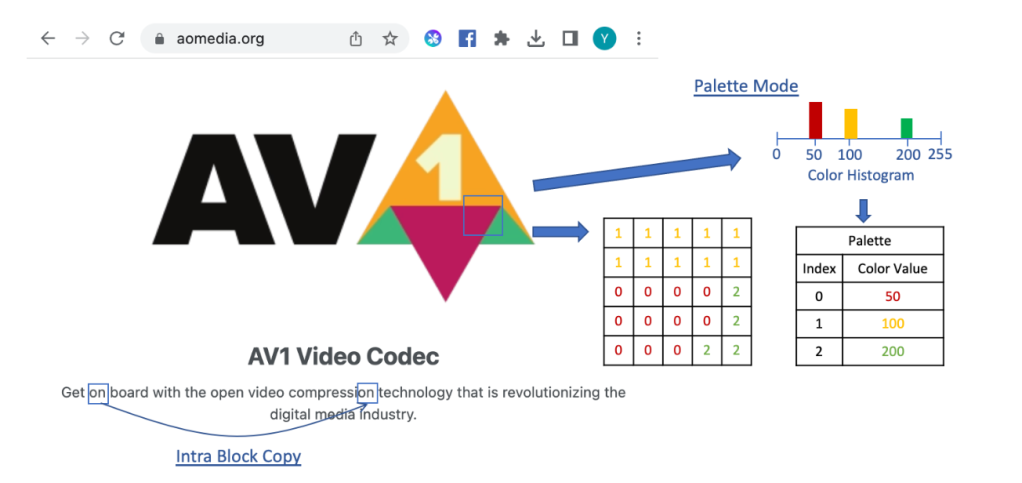

With screen content becoming an increasingly important factor for Meta, use cases such as game streaming and VR remote desktop require high-quality encoding, and in these areas AV1 truly shines. Traditionally, video encoders aren’t well suited to complex content such as text, which contains a lot of high-frequency detail, and viewers are very sensitive to blurry text. AV1 has a set of coding tools, palette mode and intra-block copy, that drastically improve performance for screen content. Palette mode is designed around the observation that the pixel values in a screen-content frame usually concentrate on a limited number of color values. It represents screen content efficiently by signaling the color clusters instead of the quantized transform-domain coefficients. In addition, typical screen content often contains repetitive patterns within the same picture. Intra-block copy lets a block be predicted from an already-coded region of the same frame, which improves compression efficiency significantly. The fact that AV1 provides these two tools at the baseline profile is a huge plus.
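The idea behind palette mode can be shown with a toy example. This is only a conceptual sketch, not AV1 bitstream syntax: a real encoder also clusters similar colors and entropy-codes the index map, and the function names here are hypothetical.

```python
def palette_encode(block):
    """Split a block of pixel values into a small palette plus an index map."""
    # The palette holds each distinct color once.
    palette = sorted({value for row in block for value in row})
    lookup = {color: index for index, color in enumerate(palette)}
    # Every pixel is replaced by the (small) index of its palette color.
    index_map = [[lookup[value] for value in row] for row in block]
    return palette, index_map


def palette_decode(palette, index_map):
    """Rebuild the block by looking each index up in the palette."""
    return [[palette[index] for index in row] for row in index_map]


# A text-like block that uses only two luma values (dark text, light background).
block = [
    [235, 235, 16, 235],
    [235, 16, 16, 235],
    [235, 235, 16, 235],
]
palette, index_map = palette_encode(block)
assert palette_decode(palette, index_map) == block
```

For content like this, a two-entry palette and one small index per pixel describe the block exactly, with sharp edges preserved, which is why the tool suits text and UI content.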

Thanks to more advanced coding tools, we see up to an 80% BD-BR savings, which is truly massive: We’re providing the same quality for one-fifth of the total bitrate.

The difference is also clear in a visual comparison. The top sample shows H.264, and AV1 is at the bottom:

RPR: Eliminating Key Frames

Another very useful feature is Reference Picture Resampling (RPR), which allows resolution changes without generating a key frame. In video compression, a key frame is one that’s encoded independently, like a still image. It’s the only type of frame that can be decoded without having another frame as reference. Key frames are larger in size and can use up the bitrate of several subsequent frames, causing video freezes or quality drops. In our current calling platform using H.264, we generate 1.5 key frames per minute just for resolution switching due to network changes. By using RPR, we can avoid generating these key frames altogether.

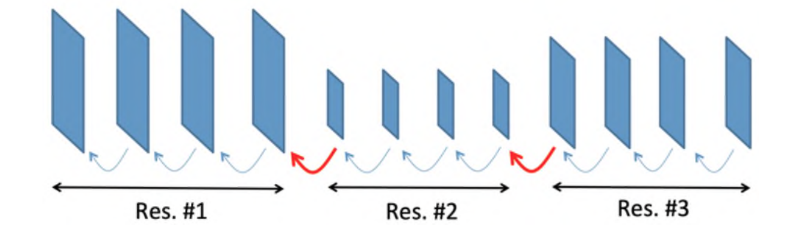

Referencing lower-resolution frames also unlocks full scalability support, where you can generate multiple quality layers, each adding a higher-quality experience:

- Temporal scalability: increasing frame rate with added layer

- Spatial scalability: increased resolution with added layer

- Quality scalability: reduced coding artifacts with added layer

This feature is used in group calling, where each client can send as many layers as possible to a central server, the selective forwarding unit (SFU), which then passes on a selection of layers to each receiver based on the receiver’s available bandwidth and other factors such as rendered resolution (for example, higher quality for the main feed).
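The forwarding decision described above can be sketched as follows. This is a minimal illustration under stated assumptions, not our SFU implementation: the `Layer` fields, the layer names, and the bitrates are hypothetical, and a production server would weigh more signals than bandwidth and rendered size.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    name: str
    height: int           # vertical resolution of the decoded picture
    fps: int
    cumulative_kbps: int  # bitrate of this layer plus every layer it depends on


def select_layer(layers, available_kbps, rendered_height):
    """Pick the richest layer that fits the receiver's bandwidth and view size."""
    # The base layer is always forwarded, even if bandwidth is very low.
    base = min(layers, key=lambda layer: layer.cumulative_kbps)
    fitting = [
        layer
        for layer in layers
        if layer.cumulative_kbps <= available_kbps
        and layer.height <= rendered_height
    ]
    return max(fitting, key=lambda layer: layer.cumulative_kbps, default=base)


# Hypothetical layer ladder: one temporal and one spatial enhancement layer.
ladder = [
    Layer("base", height=180, fps=15, cumulative_kbps=100),
    Layer("temporal-1", height=180, fps=30, cumulative_kbps=150),
    Layer("spatial-1", height=360, fps=30, cumulative_kbps=400),
]
main_feed = select_layer(ladder, available_kbps=1000, rendered_height=720)
thumbnail = select_layer(ladder, available_kbps=1000, rendered_height=180)
```

With this ladder, the main feed gets the spatial layer while a thumbnail-sized view of the same sender stops at the temporal layer, since extra resolution would not be visible there.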

Challenges and Solutions

All of these helpful features and quality wins don’t come for free, especially given the major challenge of upgrading to a codec that is 20 years newer. We’ll go over the biggest challenges and how we’re working at Meta to mitigate them.

Binary Size

Using the open-source libaom as an example, AV1 support will add 1.7 MB to your application, or 600 kB when compressed for distribution. This might not be a concern at all for a startup company trying to build a user base, but for a company serving billions of users, it’s a major challenge. Binary size affects update success rate, and an increase will leave more users on older clients. Application startup time is also affected by binary size, and the time it takes to bring up an incoming call is important for a calling application. Additionally, general software health metrics such as memory usage and crash rate are closely correlated with binary size. An increase on the order of 600 kB is substantial and could be equivalent to a whole year’s feature budget for a large organization.

In an attempt to mitigate this problem, we devised a couple of approaches. Since we were integrating a big library, our immediate solution was to use a dynamic-download framework to download AV1 as a separate feature component. Unfortunately, sometimes download failures occurred. These failures could have been due to bad network conditions or device issues, or they simply might have been random occurrences. Regardless of the cause, user experience would certainly have been affected. Hence, a dynamic-downloading approach could not be the answer to our problem.

Next, we considered optimizations to reduce the library’s binary size. For example, the quantization matrix (QM) coding tool takes up about 10% of the total library size of libaom. Optimization could reduce the size of QM by one-half. This strategy could be extended into an end-to-end optimization pipeline that ultimately removes unused tools from the library. For instance, if QM is not needed, we could remove it completely to free up 60 kB of binary space. We also have some flexibility at the application level. Besides RTC, the Messenger App has other features such as transcoding of video messages. Some of these features could be made to share the same library, reducing binary size. Likewise, we could avoid adding a codec library to the application by using built-in codec support.

CPU Usage and Memory Usage

While binary size might not be an issue for all applications, CPU usage certainly is. Media compression is always a trade-off between quality, bitrate, and processing. Even when used in low-complexity mode and real-time setting, AV1 is still more CPU-intensive by approximately a factor of five than the H.264 implementation we’re currently using. Although the performance is high enough to encode/decode on a single mobile CPU core, this can still create a problem, since it increases the power consumption on the device. For Meta calling, talk time is the number one top-level metric we track, and we won’t ship features that decrease talk time. We know there’s a direct link between battery usage and talk time. Increase battery usage by 1%, and talk time will drop by almost the same amount. Luckily, increased quality will also increase talk time, but we need to strike the right balance.

First, we need to establish what the real power cost is. Luckily, a five-times CPU increase for encoding doesn’t translate to a five-times increase in power use, which would be impossible to overcome. The biggest power draw on a phone comes from the display, and even though the SoC (system on a chip) comes in second, it’s also being used for many other operations and applications. To establish the real cost, we measured the actual power usage of a test phone by bypassing the battery and hooking the phone up to a power meter. That way we can make a true comparison during a real call using both AV1 and H.264. On our test device, a Pixel 7, we see an increase of 0.11 W when switching to AV1 encoding for a typical call, which amounts to an increase of approximately 4%, depending on other factors such as screen brightness.

Even though this increase is much smaller than the fivefold increase in CPU usage, it’s still too large to ship AV1 to all clients across the board. We need to make sure we maximize the benefit while minimizing the negative impact.

The first task was to identify a list of devices capable of using AV1, such that we minimize the chance of video freeze or device overheating when AV1 is enabled on an incompatible device.

Since there are not many iPhone models, compiling a list of eligible iPhone models was relatively easy. For Android devices, it was a different story: there are simply too many legacy Android devices. We tried selecting devices based on memory, year of release, and even Android OS version, but none of these filters proved to be reliable. In the end, we had to use Meta’s in-house device-benchmarking system to come up with a reliable list of compatible Android devices.

Next, we dealt with the out-of-memory crashes observed in A/B tests. Our initial suspicion was that a memory leak existed in the AV1 library, but subsequent investigations did not support this hypothesis. Instead, we suspected that apps running in the background were contributing to the crashes, since higher overall memory usage increases the chance of memory running out.

Memory usage is highly correlated with video resolution. To mitigate the crash regression, we decided to create a dynamic codec switch that enables AV1 initially for low- and medium-resolution settings and falls back to H.264 for high resolution. In fact, a hardware codec is generally preferred at higher resolutions to reduce power consumption. Therefore, until AV1 hardware support becomes available, it may be a good idea to avoid using AV1 at high resolution on mobile devices.

Despite our effort to select a list of high-end Android phones compatible with AV1, many factors can still undermine the computing capabilities of these phones during a call. For example, a background application that consumes a lot of CPU can cause the device to overheat, and an overheating device has to reduce its CPU frequency.

To better understand this issue, we examined encoding latency based on data we collected from the Messenger App. The graph shows the AV1 distribution having a more prominent right skew than that of H.264. This was expected. However, the encoding latency of some devices was surprisingly close to the input frame interval (the time available to encode each frame at the input frame rate), indicating that it was almost impossible for these devices to perform proper real-time encoding. These devices would likely overheat and become unable to encode AV1 video in real time, resulting in video freezes.

Our solution was to make our codec selection smarter. Resolution alone was not enough as a criterion. To determine whether a device has enough computing power and whether a switch to H.264 is needed, we have to include several device-health measurements in our selection criteria, such as encoding latency and device battery level.
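A selection rule along these lines might look like the sketch below. It is illustrative only: the `DeviceHealth` fields, the function name, and every threshold are assumptions made for the example, not the values or signals used in production.

```python
from dataclasses import dataclass


@dataclass
class DeviceHealth:
    av1_encode_ms: float  # recent per-frame AV1 encode time (hypothetical signal)
    battery_pct: float    # remaining battery, 0 to 100


def choose_encoder(width, height, fps, health,
                   max_av1_pixels=640 * 360,  # assumed resolution cap
                   latency_headroom=0.7,      # assumed share of the frame interval
                   min_battery_pct=20.0):     # assumed battery floor
    """Return "AV1" only when resolution and device health both allow it."""
    frame_interval_ms = 1000.0 / fps
    if width * height > max_av1_pixels:
        return "H264"  # high resolution: fall back, as memory use grows with it
    if health.av1_encode_ms > latency_headroom * frame_interval_ms:
        return "H264"  # encoder is too close to missing the real-time deadline
    if health.battery_pct < min_battery_pct:
        return "H264"  # protect talk time on a nearly empty battery
    return "AV1"


healthy = DeviceHealth(av1_encode_ms=10.0, battery_pct=80.0)
throttled = DeviceHealth(av1_encode_ms=30.0, battery_pct=80.0)
choose_encoder(640, 360, 30, healthy)    # "AV1"
choose_encoder(640, 360, 30, throttled)  # "H264": 30 ms exceeds 70% of 33.3 ms
```

Comparing encode time against the frame interval, rather than against a fixed number of milliseconds, keeps the rule meaningful when the frame rate changes during a call.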

Owing to the improved codec-selection strategy, we were able to make AV1 available to mid-range Android devices. While some of these mid-range devices are not able to perform real-time AV1 encoding, they are capable of real-time AV1 decoding. We could implement an asymmetric codec design in this case. That is, mid-range devices would still encode and send H.264 video, but they could receive AV1 video from high-end devices on the peer side. As a result, we successfully increased AV1 coverage on Android devices.
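The asymmetric design amounts to negotiating each direction of the call separately. A minimal sketch, assuming each client advertises separate encode and decode capabilities (the `Capabilities` type and the preference order are hypothetical, not our signaling format):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Capabilities:
    encode: frozenset  # codecs this device can encode in real time
    decode: frozenset  # codecs this device can decode in real time


PREFERENCE = ("AV1", "H264")  # most efficient codec first


def pick_send_codec(sender, receiver):
    """Choose the codec for one direction: sender encodes, receiver decodes."""
    for codec in PREFERENCE:
        if codec in sender.encode and codec in receiver.decode:
            return codec
    raise ValueError("no codec supported by both sides")


high_end = Capabilities(encode=frozenset({"AV1", "H264"}),
                        decode=frozenset({"AV1", "H264"}))
mid_range = Capabilities(encode=frozenset({"H264"}),
                         decode=frozenset({"AV1", "H264"}))

pick_send_codec(high_end, mid_range)  # "AV1": the mid-range device only decodes it
pick_send_codec(mid_range, high_end)  # "H264": the mid-range device cannot encode AV1
```

Because the two directions are resolved independently, a single call can carry AV1 one way and H.264 the other.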

In-Product Measurements

Whenever we ship a feature, it’s always important to be able to measure the feature’s impacts. The measurement strategy has to reliably provide evidence to support the benefits of the feature. Moreover, it has to effectively monitor the feature’s long-term impacts.

While we needed an in-product quality measurement, the industry had yet to produce a good solution, and there was no reliable quality measurement in the RTC pipeline. WebRTC reports the resolution and quantization parameter (QP), but this is only an approximation of quality. Note that we are comparing two different codecs, AV1 and H.264. In general, each codec has not only its own quantization definitions but also its own in-loop filters. Therefore, when comparing two codecs under the same call conditions, QP simply does not work.

We had to design our own quality measurement, one that is low in complexity so that it can cover all kinds of devices. In the field of video, PSNR (peak signal-to-noise ratio) is generally seen as a reliable metric. Although PSNR does not require a lot of computation, implementing it in RTC is still challenging. First and foremost, PSNR is a full-reference metric: it compares the decoded video against the source. In the RTC video pipeline, however, the sender side does not have the decoded video frame, and the receiver side does not have the source video. Therefore, PSNR cannot be computed directly on either side. In addition, the sender scales the video according to the available network bandwidth before encoding it, making PSNR computation even more challenging.

We proposed a framework for PSNR computation to circumvent the above issues. First, we modified the encoder to report distortions caused by compression. Next, we designed a lightweight scaling-distortion-estimation algorithm. We then computed a video PSNR according to the distortion values generated by the encoder and the algorithm. Thus, a reliable quality measurement of the impact of AV1 became possible.
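The final step of that framework, turning distortion values into a PSNR, can be sketched as below. The standard definition is PSNR = 10 * log10(MAX^2 / MSE). How the two distortion terms are combined is an assumption of this sketch: it simply adds them, which treats compression and scaling errors as uncorrelated. The function name and inputs are hypothetical.

```python
import math


def psnr_from_distortion(encode_sse, scaling_sse, num_pixels, max_value=255):
    """PSNR in dB from two sum-of-squared-error terms over the same frame.

    encode_sse:  squared error reported by the encoder for compression
    scaling_sse: estimated squared error introduced by downscaling
    Assumption: the two errors are uncorrelated, so their energies add.
    """
    mse = (encode_sse + scaling_sse) / num_pixels
    if mse == 0:
        return math.inf  # identical frames
    return 10.0 * math.log10(max_value ** 2 / mse)
```

Everything needed here is available on the sender, so no decoded frame has to be compared against the source on either side of the call.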

AV1 is already in use in Meta’s Messenger App. Several advantages are apparent, including improved user feedback as reported by the A/B tests. We are extremely excited to deliver this technology leap to billions of users. We truly believe this is the right industry moment to adopt AV1 in RTC. In-product quality measurement is still a challenging issue in RTC products, and as we continue our endeavors, we look forward to industry collaborations to enhance AV1 call quality and improve user experience.