Additional Authors: Greg Dirrenberger and Udeepta Bordoloi

Users expect fast, seamless mobile-app experiences. A funnel analysis of some of our key product flows shows that a significant fraction of users abandon their activity when a delay of a few seconds occurs. Performance and reliability are levers for engagement and brand perception. Apps such as Facebook combine high technical complexity with massive scale: billions of users and thousands of developers. So the stakes for performance and reliability are high.

I support Meta’s Performance, Reliability, and Efficiency team, and we work on product quality. Our research has shown that performance and reliability are key to user experience. One of my proudest moments was working on memory efficiency and delivering a fix that was featured at a company Q&A in 2024. That was an incredible moment for the team.

In this blog post, I will take you behind the scenes into our philosophy of putting the user at the center of our quality programs. I’ll show you why quality should be a business priority and how to methodically approach it.

Meta scale

First, let’s frame the scale of the Facebook app:

- Users: We have two billion daily-active users across the globe. They use Facebook to build connections with people and communities, explore content, and express themselves. Of course, they expect a trustworthy, high-quality experience. Importantly, user expectations evolve as mobile devices, mobile apps, and networks improve.

- The app: Facebook has been on mobile platforms for more than 15 years. We have seen many product evolutions and dozens of product features. We ship thousands of changes server-side with multiple rollouts per day, and we ship thousands of changes in a weekly mobile-client release. This requires per-change validation, continuous integration, code and configuration rollout safety, and production operations.

- Developers: We have thousands of developers who work on different features and have varying levels of experience and expertise. Every developer must be responsible for quality.

Product quality

At a high level, the areas of quality that correlate most to user engagement are:

- Performance: Performance consists of loading-time latency and responsiveness. Loading-time latency includes the time from tapping the app icon to viewing content, as well as navigation between screens; for example, navigating to the Marketplace screen from the Newsfeed home screen. Since Facebook is content driven, we also consider pagination loading: the spinning wheel that appears when you reach the end of your feed. We have found that users’ tolerance for content-loading latency is only one to three seconds, depending on the product context. Responsiveness covers how quickly the app responds to touches and whether frames drop while scrolling.

- Stability: Stability reflects whether the app is available in the foreground when you expect it to be. We measure it by the frequency of application crashes.

- Correctness: Correctness reflects whether the functionality of the product matches user expectations.
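Responsiveness while scrolling can be made concrete by counting frames that miss their display deadline. The sketch below is a minimal illustration, assuming a 60 Hz display and a list of frame presentation timestamps in milliseconds; the function and field names are hypothetical, and on real devices these timestamps would come from platform frame-timing APIs rather than from code like this.

```python
from __future__ import annotations

from dataclasses import dataclass

# Assumption: a 60 Hz display, so each frame has a ~16.67 ms budget.
FRAME_BUDGET_MS = 1000.0 / 60.0


@dataclass
class ScrollJankReport:
    total_frames: int  # frames actually presented
    dropped_frames: int  # frames that should have been presented but were skipped

    @property
    def drop_ratio(self) -> float:
        # Fraction of expected frames that were never presented.
        expected = self.total_frames + self.dropped_frames
        return self.dropped_frames / expected if expected else 0.0


def count_dropped_frames(
    frame_timestamps_ms: list[float], budget_ms: float = FRAME_BUDGET_MS
) -> ScrollJankReport:
    """Estimate dropped frames from presentation timestamps during a scroll.

    A gap of k frame budgets between two presented frames implies that
    roughly k - 1 frames were skipped.
    """
    dropped = 0
    for prev, curr in zip(frame_timestamps_ms, frame_timestamps_ms[1:]):
        intervals = round((curr - prev) / budget_ms)
        dropped += max(0, intervals - 1)
    return ScrollJankReport(total_frames=len(frame_timestamps_ms), dropped_frames=dropped)
```

For example, a 50 ms gap between two frames counts as two dropped frames, because two 16.67 ms deadlines passed without a new frame.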

We have additional quality domains that matter to users, such as application size and device health (for example, battery usage). The areas above narrow our focus to the quality domains that impact users most. Furthermore, our definition of product quality also depends on the specific product experience. For example, when watching videos, video-playback quality is highly relevant to that specific product experience.

Deep Dive: Stability

Stability is one of our critical quality domains, and we measure it by the frequency of foreground app crashes, including application-not-responding errors (ANRs) on Android and stalls on iOS. Crashes cause immediate, tangible user pain, for example when users are blocked from even opening the app, which we call an “insta-crash.” Importantly, not all crashes are equal. A crash while creating a post is more painful if you cannot recover the content, and repeated crashes that completely block users’ intended activity are more painful still.

Then there are long-term effects to consider. Our users remember crashes for several months, so crashes also have a long-term impact on the perception of app quality and the brand. Even after a specific crash is fixed, users are left with a feeling of poor stability. Our analysis also shows that crashes cause users to churn off the application. In other words, we can lose trust in buckets, and then must rebuild it drop by drop.

ANRs and stalls require additional focus, because they are often difficult to fix due to a lack of actionable diagnostics. For example, we added telemetry to the main-thread message queue, and then had to make that telemetry useful to product developers. We visualize thread execution and blockages such as disk I/O, CPU, waiting on other threads, priority inversion, and more. Examples of resolving ANRs include moving IPCs off the main thread, dispatching higher-priority broadcast receivers, and creating asynchronous service-initialization interfaces.
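To illustrate the idea of main-thread message-queue telemetry, here is a minimal Python simulation that times each dispatched message and records the slow ones. It is a sketch under stated assumptions, not Meta’s implementation: the queue, the 100 ms “slow” threshold, and all names are hypothetical, and the 5-second cutoff only approximates Android’s ANR timeout for unhandled input. On Android itself, `Looper.setMessageLogging` is one commonly used hook for observing when each message starts and finishes dispatching.

```python
from __future__ import annotations

import time
from collections import deque
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical thresholds. 5 s approximates Android's ANR timeout for
# unhandled input; the 100 ms "slow" cutoff is an illustrative choice.
SLOW_MESSAGE_MS = 100.0
ANR_THRESHOLD_MS = 5000.0


@dataclass
class SlowMessage:
    name: str
    duration_ms: float
    is_anr_risk: bool


@dataclass
class MonitoredQueue:
    """A toy single-threaded message queue that times every dispatched message."""

    clock: Callable[[], float] = time.monotonic  # seconds; injectable for tests
    pending: deque = field(default_factory=deque)
    slow: list[SlowMessage] = field(default_factory=list)

    def post(self, name: str, handler: Callable[[], None]) -> None:
        self.pending.append((name, handler))

    def run_until_idle(self) -> None:
        while self.pending:
            name, handler = self.pending.popleft()
            start = self.clock()
            handler()  # the simulated main thread is blocked during this call
            elapsed_ms = (self.clock() - start) * 1000.0
            if elapsed_ms >= SLOW_MESSAGE_MS:
                self.slow.append(
                    SlowMessage(name, elapsed_ms, elapsed_ms >= ANR_THRESHOLD_MS)
                )
```

Attaching a name to every slow message is what makes the data actionable: a developer sees which handler blocked the thread, rather than only that an ANR happened.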

Memory issues come with even fewer diagnostics, and sometimes none. On Android, we encounter Java memory leaks, native-memory leaks, and low-memory kills (LMKs). By the time a memory issue surfaces, we have little or no information about its cause; for example, the leak could have originated on the previous screen. So we built custom telemetry for object-allocation tracking. For sudden memory spikes, we can also use heap profiling to identify the leak. For gradual leaks, we track object allocations over the life cycle of the process. Given the performance cost of diagnostics and the need to protect data privacy, this requires selective sampling.
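Sampled allocation tracking over a process’s life cycle can be sketched as follows. This is a minimal illustration, assuming the runtime can notify us of each allocation and free with an object ID, a call-site label, and a size; the tracker, its hooks (`on_alloc`, `on_free`), and the growth heuristic are hypothetical. Sampling keeps overhead and data volume down, and scaling each sampled size by `1 / sample_rate` keeps the per-site totals as unbiased estimates.

```python
from __future__ import annotations

import random
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class SampledAllocationTracker:
    """Estimate live bytes per call site from a random sample of allocations."""

    sample_rate: float = 0.01
    rng: random.Random = field(default_factory=random.Random)
    _live: dict[int, tuple[str, int]] = field(default_factory=dict)
    live_bytes_by_site: dict[str, float] = field(
        default_factory=lambda: defaultdict(float)
    )

    def on_alloc(self, obj_id: int, site: str, size: int) -> None:
        # Record each allocation with probability sample_rate.
        if self.rng.random() < self.sample_rate:
            self._live[obj_id] = (site, size)
            self.live_bytes_by_site[site] += size / self.sample_rate

    def on_free(self, obj_id: int) -> None:
        # Only objects sampled at allocation time are tracked.
        entry = self._live.pop(obj_id, None)
        if entry is not None:
            site, size = entry
            self.live_bytes_by_site[site] -= size / self.sample_rate

    def snapshot(self) -> dict[str, float]:
        return dict(self.live_bytes_by_site)


def growing_sites(snapshots: list[dict[str, float]], min_growth: float) -> list[str]:
    """Flag call sites whose estimated live bytes grow in every interval."""
    if len(snapshots) < 2:
        return []
    flagged = []
    for site in sorted(set().union(*snapshots)):
        series = [s.get(site, 0.0) for s in snapshots]
        deltas = [b - a for a, b in zip(series, series[1:])]
        if all(d > 0 for d in deltas) and series[-1] - series[0] >= min_growth:
            flagged.append(site)
    return flagged
```

Comparing periodic snapshots separates a gradual leak (a call site whose live bytes rise in every interval) from allocations that are freed promptly.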

Deep Dive: Correctness

Correctness is another critical quality domain. We need to ensure that the app behaves the way users expect. One way we measure functional correctness is through a feature we call “RageShake”: while in a Meta mobile app, shake your phone to report a bug. Give it a try! Reporting a bug reflects high user intentionality and is a strong signal of user pain. To classify user bug reports, we use natural-language processing, parse the diagnostics, and apply labels that identify traits. Machine-learning classification then groups reports into tangible user symptoms that we need to fix, such as “Can’t load news feed” and “No audio in Reels.”
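The classification step can be illustrated with a toy text classifier. The text does not specify the model, so this sketch swaps in multinomial naive Bayes with add-one smoothing as a stand-in; the training reports are invented for illustration, and only the two symptom labels come from the paragraph above. A production system would also use the parsed diagnostics, not just the report text.

```python
from __future__ import annotations

import math
import re
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesSymptomClassifier:
    """Multinomial naive Bayes with add-one smoothing over report words."""

    def __init__(self) -> None:
        self.label_counts: Counter[str] = Counter()
        self.word_counts: dict[str, Counter[str]] = defaultdict(Counter)
        self.vocab: set[str] = set()

    def fit(self, reports: list[tuple[str, str]]) -> NaiveBayesSymptomClassifier:
        for text, label in reports:
            self.label_counts[label] += 1
            for word in tokenize(text):
                self.word_counts[label][word] += 1
                self.vocab.add(word)
        return self

    def predict(self, text: str) -> str | None:
        total = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, count in self.label_counts.items():
            score = math.log(count / total)  # log prior of the symptom
            denom = sum(self.word_counts[label].values()) + len(self.vocab)
            for word in tokenize(text):
                if word in self.vocab:  # ignore words never seen in training
                    score += math.log((self.word_counts[label][word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical labeled reports.
TRAINING = [
    ("news feed won't load, just a spinner", "Can't load news feed"),
    ("my feed is blank and never loads", "Can't load news feed"),
    ("feed stuck loading after update", "Can't load news feed"),
    ("reels play but there is no sound", "No audio in Reels"),
    ("no audio on reels videos", "No audio in Reels"),
    ("sound missing when watching reels", "No audio in Reels"),
]
```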

Some bug reports require interpretation, so we distinguish between “objective” and “subjective” bugs. “Objective” bugs occur when a product feature is actually broken; for example, a user taps the “Like” button to react to a post, but the reaction fails to register. Objective bugs represent feature regressions and are tangible signals that a fix is required. “Subjective” bugs occur when a user expects an experience that the current design does not provide; for example, the user would prefer a “Follow” button instead of a “Like” button, or cannot access a desired (but unavailable) feature and does not know why. Subjective bugs still reflect user pain, but their cause is more ambiguous, so addressing them requires a deeper understanding. Sometimes, subjective bugs lead to new features or a redesign.

We also aggregate “Voice of the Community” reports, which are deep dives into product experiences and cohorts of users. These serve as case studies to improve existing product experiences and build new ones. For example, Voice of the Community reports have prompted improvements in ranking quality, monetization for creators, Newsfeed loading, and a long tail of usability issues.

User bug reports can be imprecise and low in volume, so the signal can be hard to interpret. The recommended approach is to instrument diagnostics for user flows, including success paths, failure paths, and funnel analysis. Telemetry on instrumented paths can more precisely measure the magnitude of a correctness issue and pinpoint its root cause quickly. For paths that are not well instrumented, user bug reports then serve as a catch-all fallback.
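Instrumenting a flow’s success and failure paths makes funnel analysis straightforward. The sketch below assumes a hypothetical event log of `(session_id, step)` pairs for a post-composer flow, with failures logged as `error:<reason>`; the step names and the error convention are illustrative, not a real Meta schema.

```python
from __future__ import annotations

from collections import Counter, defaultdict

ERROR_PREFIX = "error:"  # hypothetical convention for failure-path events


def funnel_report(
    events: list[tuple[str, str]], steps: list[str]
) -> list[tuple[str, int, float]]:
    """Return (step, sessions_reached, conversion_from_previous_step) per step.

    A session counts at a step only if it also logged every earlier step,
    so counts never increase along the funnel.
    """
    reached: dict[str, set[str]] = defaultdict(set)
    for session_id, step in events:
        reached[session_id].add(step)

    report: list[tuple[str, int, float]] = []
    prev_count = None
    for i, step in enumerate(steps):
        required = steps[: i + 1]
        count = sum(1 for seen in reached.values() if all(s in seen for s in required))
        if prev_count is None:
            conversion = 1.0
        else:
            conversion = count / prev_count if prev_count else 0.0
        report.append((step, count, conversion))
        prev_count = count
    return report


def failure_breakdown(events: list[tuple[str, str]]) -> dict[str, int]:
    """Count failure-path events by reason to size each failure mode."""
    reasons = Counter(
        step[len(ERROR_PREFIX):] for _, step in events if step.startswith(ERROR_PREFIX)
    )
    return dict(reasons)
```

The funnel shows where users drop off, and the failure breakdown shows why, which is the precision that free-text bug reports usually lack.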

Deep Dive: Video quality

Video quality is a product-specific domain. To put the scale of video on Facebook into perspective, we serve billions of hours of video watched per day and store exabytes of video. This scale comes with challenges. Some users are constrained by limited data plans, and our network backbone has finite bandwidth, so we cannot deliver unbounded bytes to improve video quality. Furthermore, playback quality is capped by upload quality. Finally, visual quality has diminishing returns due to physical device limitations; for example, not all devices support the efficient codecs needed to play 1080p at 60fps.

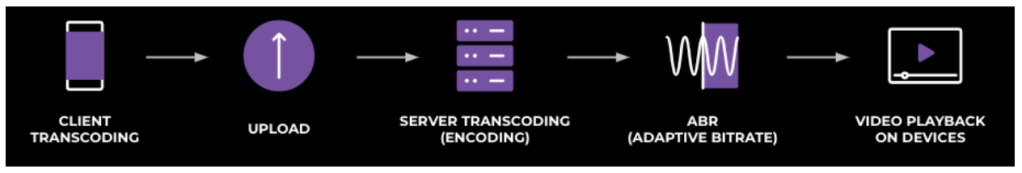

We take an end-to-end approach to quality. “Quality of Experience” is our term for quality at every stage of our video pipeline (uploading, encoding, and playback), as illustrated above in Figure 1. In playback, for example, this means initial playback success, latency to playback, and rebuffering while the video plays. We use an adaptive bitrate (ABR) mechanism to balance video-playback quality and network bandwidth. The ABR algorithm is a risk-reward algorithm: it weighs the risk of a playback stall against the reward of higher visual quality.
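The three playback signals named above (initial playback success, latency to playback, and rebuffering) can be aggregated per cohort as in this sketch. The `PlaybackSession` fields are hypothetical, and the rebuffer ratio here is defined as stalled time divided by watched plus stalled time, which is one common definition rather than necessarily the one Meta uses.

```python
from __future__ import annotations

import statistics
from dataclasses import dataclass, field


@dataclass
class PlaybackSession:
    # Hypothetical per-session telemetry; first_frame_s is None if playback never started.
    first_frame_s: float | None
    watch_time_s: float
    stall_durations_s: list[float] = field(default_factory=list)


def playback_qoe(sessions: list[PlaybackSession]) -> dict[str, float]:
    """Aggregate playback success, latency to first frame, and rebuffering."""
    started = [s for s in sessions if s.first_frame_s is not None]
    success_rate = len(started) / len(sessions) if sessions else 0.0
    median_ttff = (
        statistics.median(s.first_frame_s for s in started) if started else float("nan")
    )
    stalled = sum(sum(s.stall_durations_s) for s in started)
    watched = sum(s.watch_time_s for s in started)
    # Rebuffer ratio: share of playback wall time spent stalled.
    total = watched + stalled
    rebuffer_ratio = stalled / total if total else 0.0
    return {
        "playback_success_rate": success_rate,
        "median_time_to_first_frame_s": median_ttff,
        "rebuffer_ratio": rebuffer_ratio,
    }
```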

Let’s consider a simplified example in which a video has three encoding lanes, specifically:

- Lower quality at 1 Mbps.

- Moderate quality at 2 Mbps.

- Higher quality at 3 Mbps.

Assume we’re in the middle of a video. As illustrated in Figure 2, the first segment has been played, the second segment has been downloaded and buffered, and the last segment is not yet downloaded. For the not-yet-downloaded segment, we need to estimate the available bandwidth and choose an encoding lane. We want to minimize the likelihood of a playback stall caused by downloading, the spinning circle that makes users wait instead of watching. We also want to maximize playback quality, which generally means getting as close to the upload quality as the device supports. In practice, this adaptive bitrate approach has improved user engagement through better playback quality while reducing network bandwidth costs.
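The lane choice for the not-yet-downloaded segment can be sketched as follows, using the three lanes from the example. This is a simplified illustration, not Meta’s ABR algorithm: it assumes a harmonic-mean bandwidth estimate over recent throughput samples, a hypothetical 0.8 safety factor, and 2-second segments. A lane counts as low risk only if its segment should finish downloading before the buffer drains and its bitrate is sustainable at the estimated bandwidth; among low-risk lanes, the highest bitrate is the reward.

```python
from __future__ import annotations

from statistics import harmonic_mean

# Encoding lanes from the simplified example, in megabits per second (Mbps).
LANES_MBPS = [1.0, 2.0, 3.0]


def choose_lane(
    recent_throughputs_mbps: list[float],
    buffer_s: float,
    segment_s: float = 2.0,  # hypothetical segment duration
    safety: float = 0.8,  # hypothetical discount on the bandwidth estimate
    max_lane_mbps: float = 3.0,  # cap from upload quality or device decode limits
) -> float:
    """Pick the highest encoding lane that is unlikely to cause a stall."""
    # The harmonic mean is pulled down by slow samples, so it is conservative.
    bandwidth = safety * harmonic_mean(recent_throughputs_mbps)
    chosen = LANES_MBPS[0]  # always fall back to the lowest lane
    if bandwidth <= 0:
        return chosen
    for lane in LANES_MBPS:
        if lane > max_lane_mbps:
            break
        download_s = lane * segment_s / bandwidth  # megabits / Mbps = seconds
        # Risk check: finish before the buffer drains, and keep up long term.
        if download_s <= buffer_s and lane <= bandwidth:
            chosen = lane
    return chosen
```

With steady 4 Mbps samples and 4 seconds of buffer, this picks the 3 Mbps lane. A single 1 Mbps sample among them drops the harmonic-mean estimate to 2 Mbps (1.6 Mbps after the safety factor), so the sketch falls back to 1 Mbps rather than risking a stall.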

Future opportunities

As we continue to seek and invest in sustainable approaches to quality, we find that they require a change in development culture. Accountability and ownership are critical. At Meta, all employees are expected to actively use the pre-production versions of the app they work on, report bugs, and fix bugs. Ultimately, we want to minimize the rate at which bugs leak through testing, verification, and dogfooding (the practice of employees using their own products regularly to see how well they work and where they can be improved).

In addition, we achieve scale through automation and tooling. This requires full life-cycle support for regression management: detection, escalation, remediation, and prevention. Developers and product teams are empowered through automated root-cause-analysis workflows and safe-rollout mechanisms for code, configuration, and ML models. We also need learning processes to build resilient systems. With that in mind, I lead the Facebook post-mortem process for analyzing major outages and fixing systemic issues.
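Automated regression detection during a staged rollout can be illustrated with a two-proportion z-test on crash rates. This is one common statistical gate, offered as an assumption rather than a description of Meta’s tooling; the cohort structure, the z-threshold of 3, and the 5% minimum relative increase are hypothetical.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class CohortStats:
    sessions: int
    crashes: int  # sessions with at least one foreground crash

    @property
    def rate(self) -> float:
        return self.crashes / self.sessions


def crash_regression(
    control: CohortStats,
    test: CohortStats,
    z_threshold: float = 3.0,
    min_relative_increase: float = 0.05,
) -> bool:
    """Flag a regression when the test cohort's crash rate is both
    significantly (two-proportion z-test) and meaningfully above control."""
    p_control, p_test = control.rate, test.rate
    pooled = (control.crashes + test.crashes) / (control.sessions + test.sessions)
    se = math.sqrt(pooled * (1 - pooled) * (1 / control.sessions + 1 / test.sessions))
    if se == 0:
        return False  # no crashes in either cohort, or every session crashed
    z = (p_test - p_control) / se
    relative = (p_test - p_control) / p_control if p_control > 0 else math.inf
    return z >= z_threshold and relative >= min_relative_increase
```

Requiring both a large z-score and a minimum relative increase keeps tiny shifts that are only detectable because of huge sample sizes from triggering escalations.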

Another opportunity, and a focus here at Meta, is building an ecosystem of quality. We believe our mobile infrastructure should be highly performant and reliable out of the box, including our internal and open-source frameworks. Our partnerships are also critical. For example, we integrate telemetry from Google Play and Apple Diagnostics, which has been incredibly useful for fixing performance and reliability issues.

Users care about quality. Performance and reliability are high-stakes expectations for users and continue to be a Meta priority.